Unity AI Guiding Principles

Introduction

Unity AI is a suite of AI tools designed to be the best AI-native experience for Unity users, focused on enabling you to learn, get unblocked, be productive, and build unique experiences. A variety of artificial intelligence (AI) models are integrated into the Unity Editor to provide simple AI interfaces and a single economy for many features. These AI features are found in the Assistant, Generators, and Inference Engine, which you can learn more about here. This page describes the guiding principles behind the design of Unity AI.

Note that Unity AI is currently in a beta testing phase, during which we provide free and unlimited use of the services so that Unity can rapidly improve and iterate. We plan to keep making product improvements roughly monthly until we meet certain product quality, user sentiment, and infrastructure readiness requirements, so that the offering is the best it can be before we exit the beta phase. Any feedback, requests, and comments you give during this phase will help us improve Unity AI.

Our three guiding principles for Unity AI are:

- Context awareness and integration

- Data controls and customization

- Curated models

Let’s dive into each of these principles further and illustrate how they appear in the Unity AI experience.

Context awareness and integration

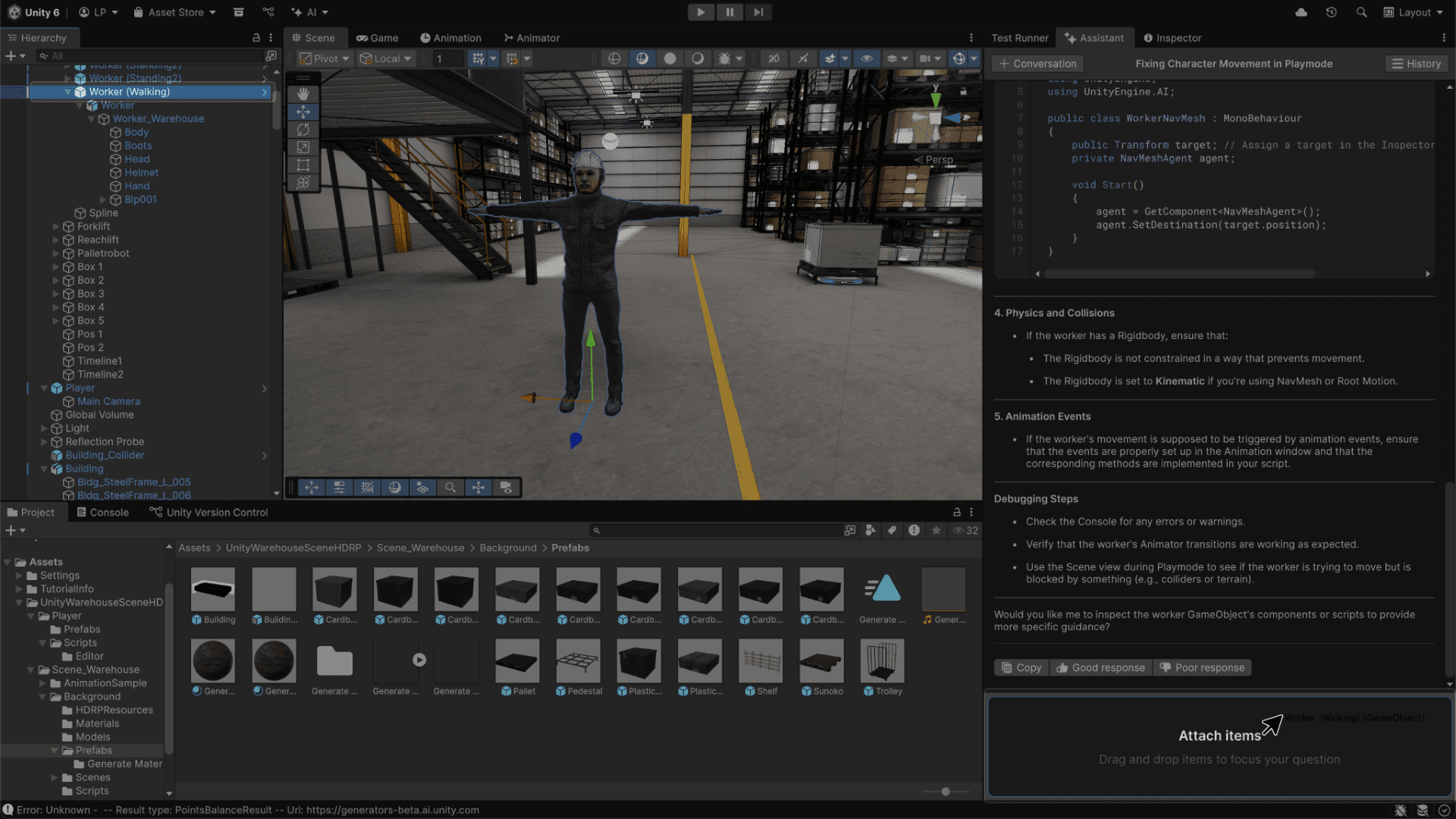

Unity AI is integrated natively in the Unity Editor with the goal of creating the best AI-native experience for Unity users. We crafted a Unity-specific LLM pipeline that understands things like your Unity version, Unity-specific structures like GameObjects and prefabs, your render pipeline, and more. You don’t need to shift between applications when using Unity AI, because it exists as a series of native Editor entry points and dockable Editor windows.

It is also uniquely integrated into the Unity Editor front end, which allows features such as drag-and-drop context and console error resolution, as well as planned future capabilities like using the visual context of your scene view. We don’t currently index your Unity project in the cloud, but we are considering that for the future on an opt-in basis.

Also, because AI-generated assets (code, visual assets, etc.) are created in the context of the Unity Editor, we embed “UnityAI” metadata in all generations. This makes them easy to locate from the native Unity search, and therefore easy to manage or remove to comply with third-party rights or AI policies, or for other commercial reasons when shipping your game.

When custom sprite training models launch later this year, they will be automatically shared with all members of your Unity ID organization. This will make it easier to generate consistently styled assets for your game among all your contributing Unity users without having to worry about account or entitlement access to other services.

Unity AI is also integrated into a number of Unity systems and workflows. We are integrating the pricing model (to buy Unity Points to spend on AI actions) directly into Unity plans (subscriptions). This will make it low-friction to get started, especially if you are on a Unity paid plan, where points will automatically be deposited in your Points balance. The only thing you need to do is install the AI packages and accept applicable terms to use them. More details on the business model are forthcoming.

Points are easy to manage because they are granted to your Unity organization and can be tracked in real time on the Unity Dashboard. Points can be used by all Unity users in your organization, which accommodates varied use across many users, use cases, and the seasonal changes that naturally happen throughout game development.

If you have ideas for how we can improve the Unity context or systems integrations, please let us know on the public roadmap.

Data controls and customization

Unity AI provides a series of transparent policies and settings that let you control how your data is used (requires active “opt-in”), and allow you to adapt your Unity AI experience to your organization's needs.

The Developer Data framework governs all data used in Unity AI. The key points as they relate to AI are:

- You own your input and output data

- Model training to improve Unity AI is OFF by default

In addition, here are the customization settings and ongoing data that we provide as you use Unity AI:

Unity Dashboard Settings & Data

- Settings

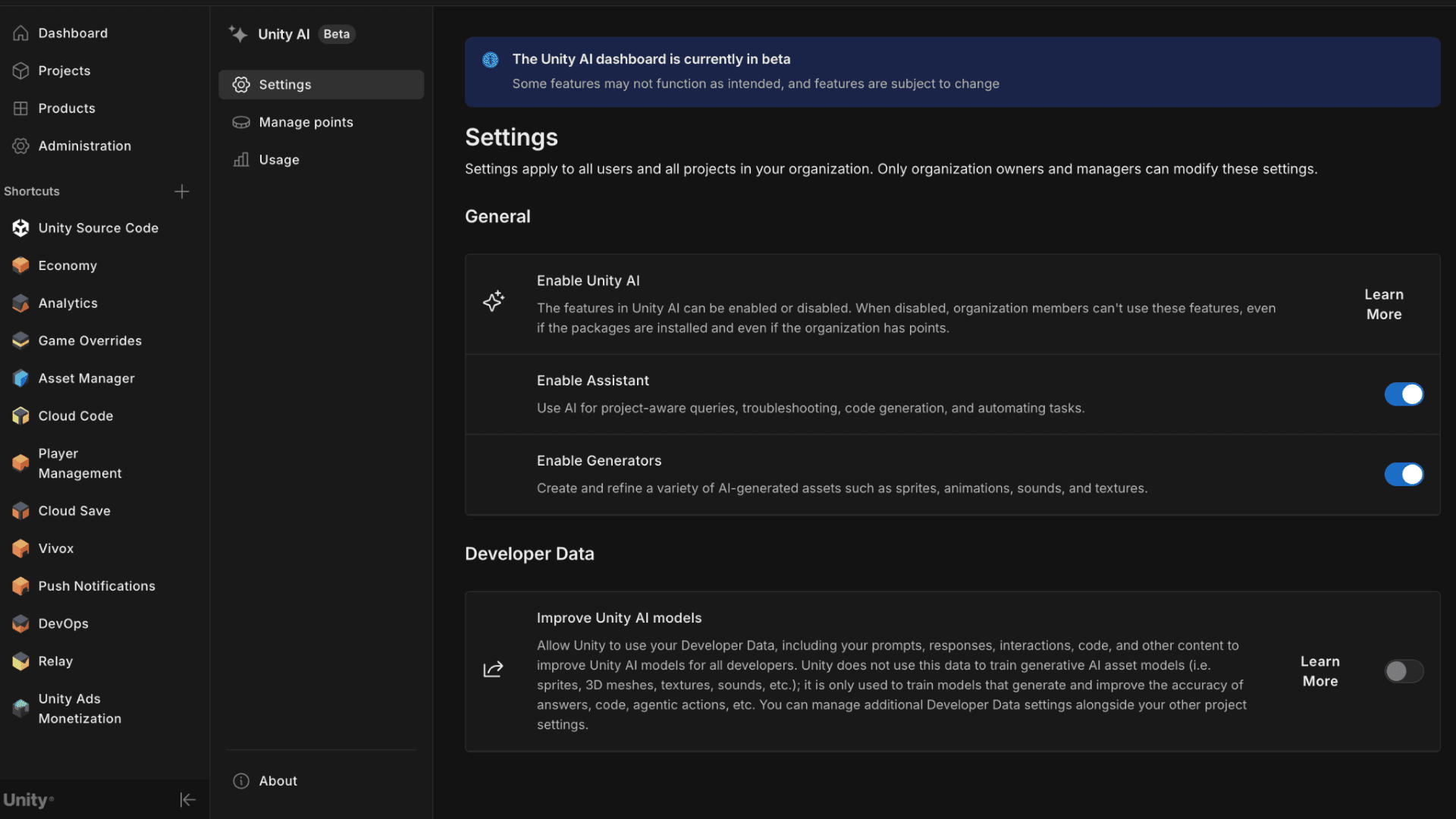

- These settings apply to all users and all projects in your organization and only organization owners and managers can modify them. In the future, we will consider adding optional project-specific, or user-specific settings and data here.

- Enable Unity AI - This setting is ON by default. Features of Unity AI can be enabled or disabled for the organization depending on your company's policies for AI. The separate toggles for the Assistant and Generators can be changed at any time. When disabled, organization members can’t use the features in Unity AI, even if the packages are installed and even if the organization has points. This setting does not affect the expiration or renewal of points, or the availability of the Inference Engine feature.

- Improve Unity AI - This setting is OFF by default. You can allow Unity to use your Developer Data, including your prompts, responses, interactions, code, and other content, to improve Unity AI models for all developers. Unity does not use this data to train generative AI asset models (e.g., for sprites, 3D meshes, textures, or sounds); it is only used to train models that generate and improve the accuracy of answers, code, agentic actions, and similar outputs.

- Usage

- The Usage page within the Unity Dashboard allows you to see usage reporting of Unity AI by query and generation type over time. In the future we’ll provide more nuanced reporting.

- Manage Points

- This page within the Unity Dashboard allows you to see points subscriptions, one-time points purchases, and how many points remain. This page will populate with data once Unity AI exits the beta phase, at which point all free beta points will expire and paid points will be required to continue using the Assistant and Generators.
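The organization-level settings above can be read as a simple gate. The following Python sketch models that logic for illustration only. The names `OrgSettings` and `can_use_feature` and the feature strings are hypothetical and are not part of any Unity API. It assumes that the Assistant and Generators each have their own toggle, that both need points once the beta ends, and that the Inference Engine depends only on its package being installed.

```python
from dataclasses import dataclass


@dataclass
class OrgSettings:
    """Hypothetical model of the Unity Dashboard settings described above."""
    assistant_enabled: bool = True    # "Enable Unity AI" toggles are ON by default
    generators_enabled: bool = True
    improve_unity_ai: bool = False    # "Improve Unity AI" is OFF by default


def can_use_feature(settings: OrgSettings, feature: str,
                    packages_installed: bool, has_points: bool) -> bool:
    """Return True if an organization member could use the given feature."""
    if feature == "inference_engine":
        # The Enable Unity AI setting does not affect Inference Engine availability.
        # Assumption: only the package itself is needed.
        return packages_installed
    if feature == "assistant":
        enabled = settings.assistant_enabled
    elif feature == "generators":
        enabled = settings.generators_enabled
    else:
        raise ValueError(f"unknown feature: {feature}")
    # A disabled toggle wins even if packages are installed and points exist.
    return enabled and packages_installed and has_points


if __name__ == "__main__":
    locked_down = OrgSettings(assistant_enabled=False, generators_enabled=False)
    print(can_use_feature(locked_down, "assistant", True, True))
    print(can_use_feature(locked_down, "inference_engine", True, False))
```

In this model, disabling a toggle blocks the feature regardless of package or points state, which mirrors the behavior described for the Enable Unity AI setting.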

Project Settings

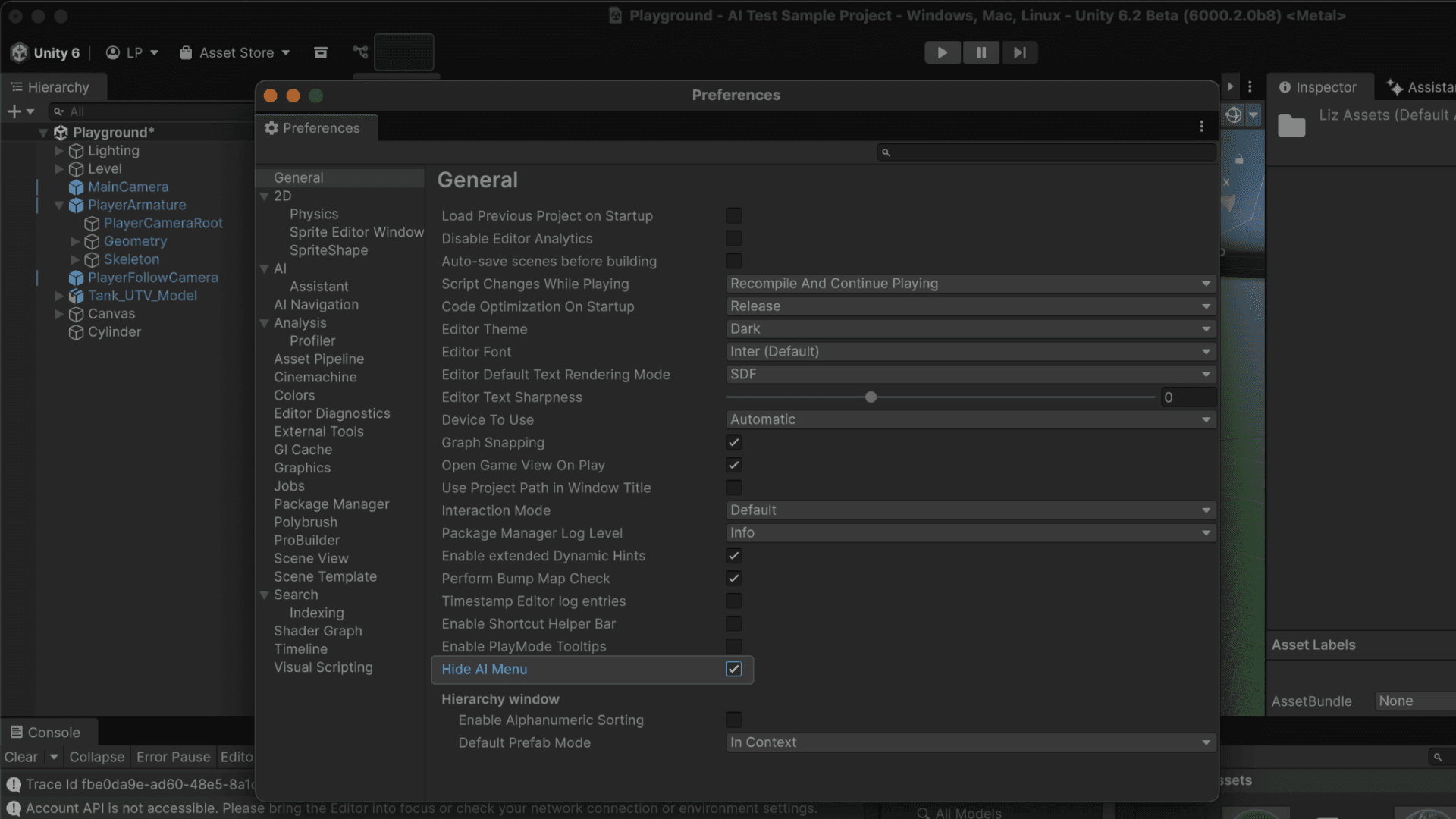

Hide AI menu button - The AI menu button displays by default in the toolbar in Unity Editor version 6.2+. You can optionally hide it with this setting in the Editor General Settings on 6000.2.0b7+.

If you need additional data controls, settings, or customizations, please let us know on the public roadmap.

Curated models

Unity AI provides a foundation so that the Unity Editor can be the assembly point for last-mile AI model integration. This single economy for AI means curated models are integrated in one place, so you don’t need to do any setup, subscription management, or tool-switching outside Unity. As a major benefit, you don’t need to subscribe to a stack of vertical tools, each with its own credits, that may only be needed at specific times in your game production cycle.

We may change and upgrade model providers and models over time to add functionality, so you always have state-of-the-art capabilities without needing to be an AI model expert yourself.

Some integrated models are hosted on Unity’s first-party server infrastructure, and some are hosted on third-party infrastructure and accessed via a partner API (“Partner Models”). Here is a list of all models that are integrated, which may change over time:

Assistant

The Unity AI Assistant uses large language models (LLMs) to answer user questions, generate code, and run agentic actions such as modifying a large number of files.

Generators

The Unity AI Generators use several first-party (Unity) and Partner Models to generate and refine assets. Here are the measures we have taken in working with Partner Model providers:

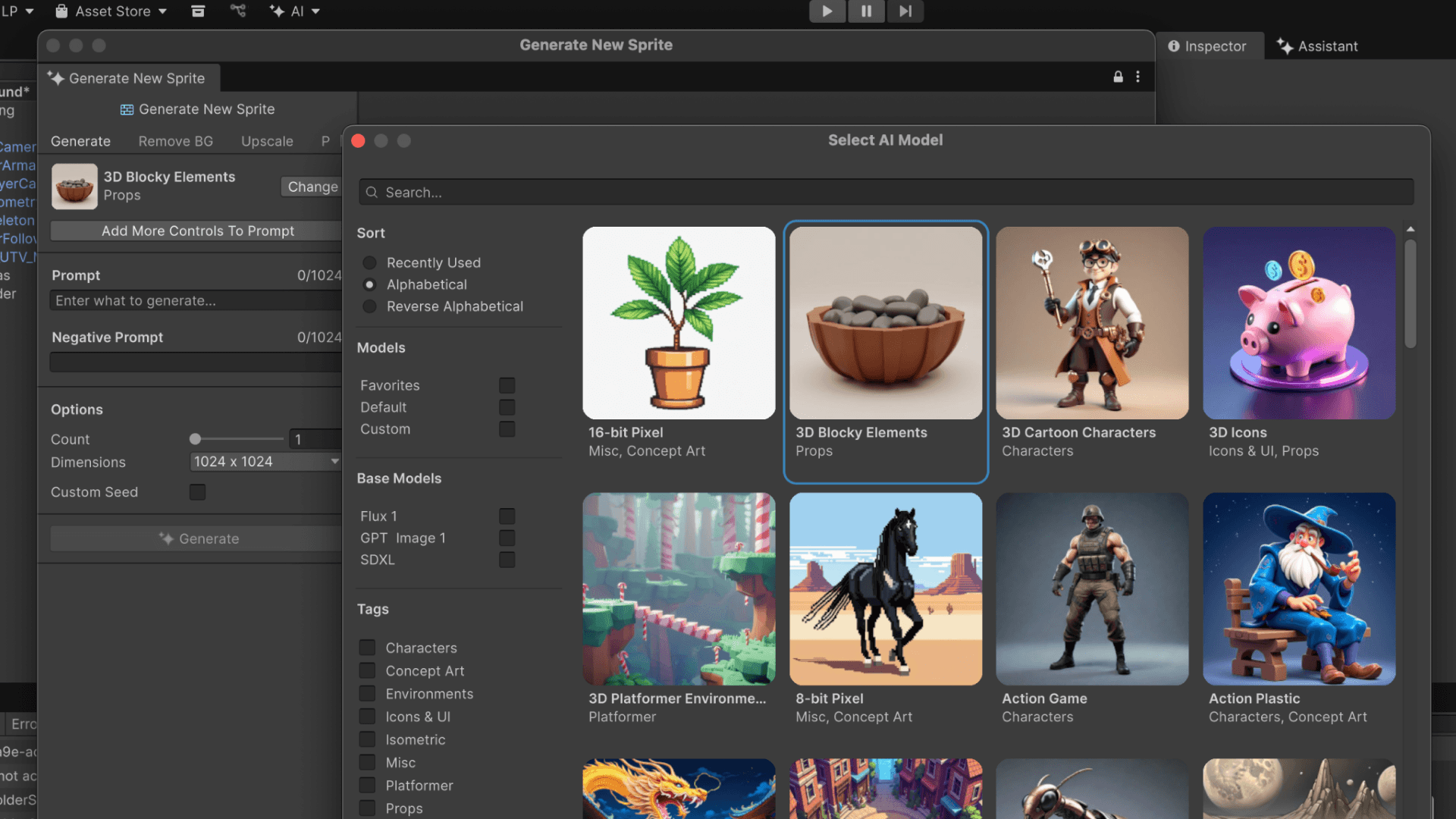

- Provider labels: We indicate which provider offers the Partner Model you are generating from, and, if relevant, the foundation model behind it. This is displayed in the selected model section of all generators, and in the model details page of the model picker view.

- Data ownership: You own all your input and output data when using Unity AI, regardless of the Partner Model that is used.

- Data transfer: We send your anonymized Developer Data, including prompts, reference assets, etc. to these Partner Model providers for the sole purpose of running the services. Your data is deleted by Partner Model providers after generation, except for custom sprite model training where the resulting custom model is saved until you delete it.

- Model training: Partner Model providers do not train their models with your Developer Data, even if you enable “Improve Unity AI” in the Unity cloud dashboard settings.

- Custom models: Unity AI offers custom-trained model functionality to users. When you choose to create a custom-trained model, that model is retrained exclusively on the data you upload for that purpose. Only users inside your Unity organization can use this custom-trained model, and the data uploaded to retrain the custom model is not used to improve Partner Models.

- Blocks: Certain Partner Models block prompts using text-matching lists, contextual references, vision models, and other means to detect queries that are likely to generate IP- or copyright-infringing or otherwise illegal assets. In some cases, this will result in a null response or blank image from an asset generator, or a user message that you must modify your prompt to proceed. In these cases, Unity AI respects the policies of individual Partner Models.

- Traceability: As stated previously, all assets generated are tagged with “Unity AI” metadata, enabling searchability, and therefore traceability. You can easily search, track, and audit AI-generated content across your project, making it easier to identify placeholders and remove generated assets as needed to comply with rights and commercial considerations.
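The model training rules stated on this page can be summarized as a small decision function. The Python sketch below is illustrative only: `ModelKind` and `may_train_on_developer_data` are hypothetical names, not a Unity API, and the three categories are a simplification of the model types this page describes.

```python
from enum import Enum


class ModelKind(Enum):
    """Hypothetical categories for the models described on this page."""
    UNITY_ANSWER_MODEL = "unity_answer_model"   # answers, code, agentic actions
    UNITY_ASSET_MODEL = "unity_asset_model"     # sprites, meshes, textures, sounds
    PARTNER_MODEL = "partner_model"             # hosted by a third-party provider


def may_train_on_developer_data(kind: ModelKind, improve_unity_ai: bool) -> bool:
    """Return True if Developer Data may be used to train this kind of model."""
    if kind is ModelKind.UNITY_ANSWER_MODEL:
        # Only allowed when the organization has opted in to "Improve Unity AI".
        return improve_unity_ai
    # Generative asset models and Partner Models are never trained on
    # Developer Data, even when "Improve Unity AI" is enabled.
    return False


if __name__ == "__main__":
    for kind in ModelKind:
        print(kind.value, may_train_on_developer_data(kind, improve_unity_ai=True))
```

Custom sprite models are deliberately left out of this sketch, because they are trained only on data you upload for that specific purpose rather than through the Improve Unity AI setting.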

Here are the Partner Models used to power the Generators, which will evolve as new providers, models, and asset types (e.g., 3D mesh, skybox) are integrated:

Unity users are ultimately responsible for ensuring that their use of Unity AI complies with our acceptable use principles (see our Terms of Service and Unity Services Content Transparency). Importantly, you are responsible for ensuring your use of Unity AI and any generated assets do not infringe on third-party rights and are appropriate for your use. As with any asset used in a Unity project, it remains your responsibility to ensure you have the rights to use content in your final build.

If you have ideas for how Unity AI can implement or further promote responsible use of AI, please let us know on the public roadmap.

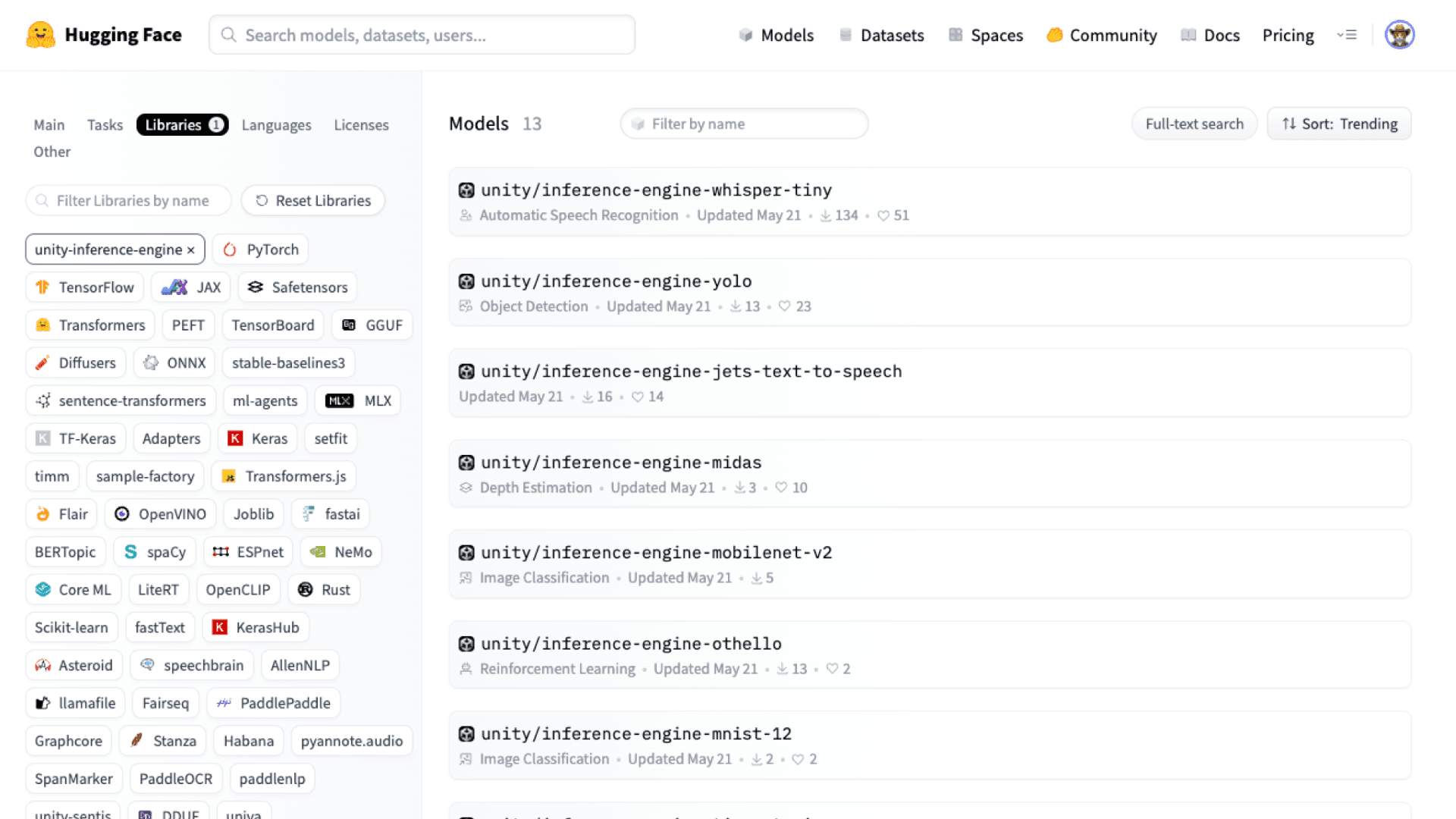

Inference Engine

The Unity AI Inference Engine enables you to run AI models on your local machine in the Unity Editor, or on end-user devices in the Unity runtime. No data from these models is stored or transferred to the cloud. Inference Engine does not include built-in models, but rather allows you to import your own custom pre-trained models, or ones acquired from model gardens like Hugging Face. See our documentation for further information and supported workflows.

If you have ideas for new models that Unity AI should integrate, or new ways we can allow you to integrate AI yourself, please let us know on the public roadmap.

If you have any questions about these product principles, please contact support@unity3d.com.