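Smooth performance is essential to creating immersive gaming experiences for players. To make sure your game is optimized, a consistent, end-to-end profiling workflow is a must, and it starts with a simple three-step procedure: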

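This page outlines a general profiling workflow for game developers. It's excerpted from the e-book The ultimate guide to profiling Unity games, available to download for free (a Unity 6 version of the guide is coming soon). The e-book was created by external and internal Unity experts in game development, profiling, and optimization.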

In this article, you'll learn about useful goals to set with profiling, common performance bottlenecks such as being CPU-bound or GPU-bound, and how to identify and investigate these situations in more detail.

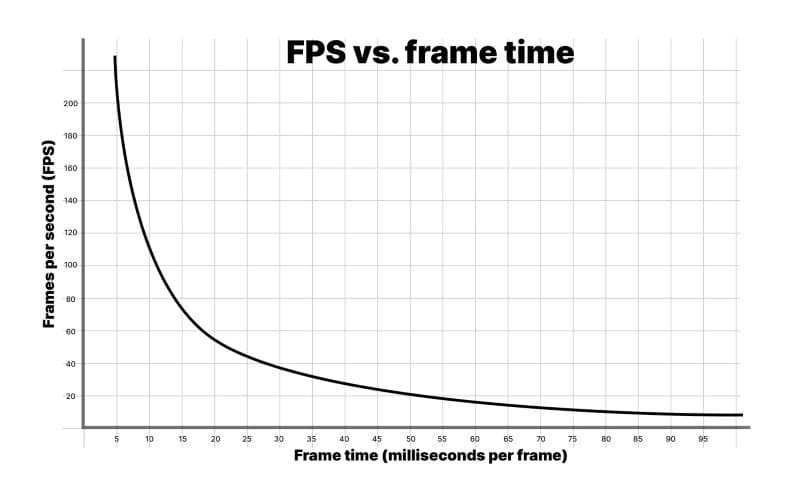

Gamers often measure performance using frame rate, or frames per second (fps), but as a developer, you're generally better off using frame time in milliseconds instead. Consider the following simplified scenario:

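During runtime, your game renders 59 frames in 0.75 seconds. However, the next frame takes 0.25 seconds to render. An average delivered frame rate of 60 fps sounds good, but in reality players will notice a stutter, because that last frame takes a quarter of a second to render.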
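The following Python sketch reproduces that arithmetic to show how the average hides the hitch. It assumes, for simplicity, that the 59 fast frames are evenly spaced; only the totals come from the scenario.

```python
# Frame times in seconds: 59 frames rendered in 0.75 s (assumed evenly
# spaced), followed by a single frame that takes 0.25 s.
frame_times = [0.75 / 59] * 59 + [0.25]

total_time = sum(frame_times)                 # about 1.0 s in total
average_fps = len(frame_times) / total_time   # 60 frames over ~1 s
worst_frame_ms = max(frame_times) * 1000.0    # the frame players notice

print(f"average fps: {average_fps:.1f}")        # average fps: 60.0
print(f"worst frame: {worst_frame_ms:.1f} ms")  # worst frame: 250.0 ms
```

The average looks perfect, while the single worst frame is 15 times longer than a 16.66 ms frame at 60 fps.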

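This is one of the reasons why it's important to aim for a specific time budget per frame. It gives you a solid goal to work toward when profiling and optimizing your game, and ultimately it creates a smoother, more consistent experience for your players.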

Each frame has a time budget based on your target fps. An application targeting 30 fps should always take less than 33.33 ms per frame (1000 ms / 30 fps). Likewise, a target of 60 fps leaves 16.66 ms per frame (1000 ms / 60 fps).
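As a minimal sketch, the budget is simply 1000 ms divided by the target frame rate. The function name is illustrative. The values are printed rounded to two decimals, so 60 fps shows as 16.67 ms instead of the truncated 16.66 ms.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Milliseconds available per frame for a given target frame rate."""
    return 1000.0 / target_fps

for fps in (30, 60):
    print(f"{fps} fps -> {frame_budget_ms(fps):.2f} ms per frame")
```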

You can exceed this budget during non-interactive sequences, for example, when displaying UI menus or loading scenes, but not during gameplay. Even a single frame that exceeds the target frame budget will cause a hitch.

Note: A consistently high frame rate in VR games is essential to avoid causing nausea or discomfort to players, and it's often required to pass certification with the platform holder.

Frames per second: A deceptive metric

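A common way that gamers measure performance is with frame rate, or frames per second. However, it's recommended that you use frame time in milliseconds instead. To understand why, look at the graph of fps versus frame time above.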

Consider these numbers:

1000 ms/sec / 900 fps = 1.111 ms per frame

1000 ms/sec / 450 fps = 2.222 ms per frame

1000 ms/sec / 60 fps = 16.666 ms per frame

1000 ms/sec / 56.25 fps = 17.777 ms per frame

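If your application is running at 900 fps, this translates into a frame time of 1.111 milliseconds per frame. At 450 fps, it's 2.222 milliseconds per frame. The frame rate appears to drop by half, yet the difference is only 1.111 milliseconds per frame.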

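If you look at the difference between 60 fps and 56.25 fps, that translates into 16.666 milliseconds and 17.777 milliseconds per frame, respectively. This also represents an extra 1.111 milliseconds per frame, but here the drop in frame rate looks far less dramatic in percentage terms.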
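The short Python sketch below makes the comparison explicit. It converts each frame rate pair from the examples above into frame times and prints the percentage fps drop next to the added milliseconds.

```python
def frame_time_ms(fps: float) -> float:
    """Convert a frame rate to the time each frame takes, in milliseconds."""
    return 1000.0 / fps

# (frame rate before, frame rate after), taken from the examples above.
for before, after in [(900, 450), (60, 56.25)]:
    delta_ms = frame_time_ms(after) - frame_time_ms(before)
    fps_drop_pct = (before - after) / before * 100.0
    print(f"{before} -> {after} fps: fps drops {fps_drop_pct:.2f}%, "
          f"frame time grows by {delta_ms:.3f} ms")
```

Both cases add the same 1.111 ms per frame, even though one looks like a 50% drop and the other like a 6.25% drop.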

This is why developers use the average frame time, rather than fps, to benchmark game speed.

Don't worry about fps unless you drop below your target frame rate. Focus on frame time to measure how fast your game is running, then stay within your frame budget.

For more information, read the original article "FPS versus Frame Time" by Robert Dunlop.

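Thermal control is one of the most important areas to optimize for when developing applications for mobile devices. If the CPU or GPU spends too long working at full throttle because of inefficient code, those chips get hot. To avoid overheating and potential damage to the chips, the operating system reduces the device's clock speed so it can cool down, which causes frame stuttering and a poor user experience. This performance reduction is known as thermal throttling.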

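Higher frame rates and increased code execution (or DRAM access operations) lead to increased battery drain and heat generation. Bad performance can also make your game unplayable on entire segments of lower-end mobile devices, which can mean missed market opportunities.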

When tackling thermal issues, treat the budget you have to work with as a system-wide budget.

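Combat thermal throttling and battery drain by profiling early, so you optimize your game from the start. Adjust your project settings to your target platform hardware to address thermal and battery drain issues.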

Adjusting frame budgets on mobile

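A general tip for combating device thermal issues during extended play sessions is to leave about 35% of each frame as idle time. This gives mobile chips time to cool down and helps prevent excessive battery drain. With a target frame time of 33.33 ms per frame (for 30 fps), the frame budget for mobile devices becomes approximately 22 ms per frame.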

The calculation looks like this: (1000 ms / 30) * 0.65 ≈ 21.67 ms

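Using the same calculation, achieving 60 fps on mobile requires a target frame time of (1000 ms / 60) * 0.65 ≈ 10.83 ms. This is difficult to achieve on many mobile devices and would drain the battery twice as fast as targeting 30 fps. For these reasons, many mobile games target 30 fps rather than 60 fps. Use Application.targetFrameRate to control this setting, and see the "Set a frame budget" section in the profiling e-book for more details about frame time.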
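Here is a minimal Python sketch of that rule of thumb. The 35% idle fraction is the default from the tip above. The function name and the validation are illustrative additions, and you may want to tune the fraction per device.

```python
def mobile_frame_budget_ms(target_fps: float, idle_fraction: float = 0.35) -> float:
    """Frame budget that leaves `idle_fraction` of every frame idle.

    The 0.35 default follows the ~35% idle-time rule of thumb for mobile.
    """
    if not 0.0 <= idle_fraction < 1.0:
        raise ValueError("idle_fraction must be in [0, 1)")
    return (1000.0 / target_fps) * (1.0 - idle_fraction)

print(f"{mobile_frame_budget_ms(30):.2f} ms")  # 21.67 ms
print(f"{mobile_frame_budget_ms(60):.2f} ms")  # 10.83 ms
```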

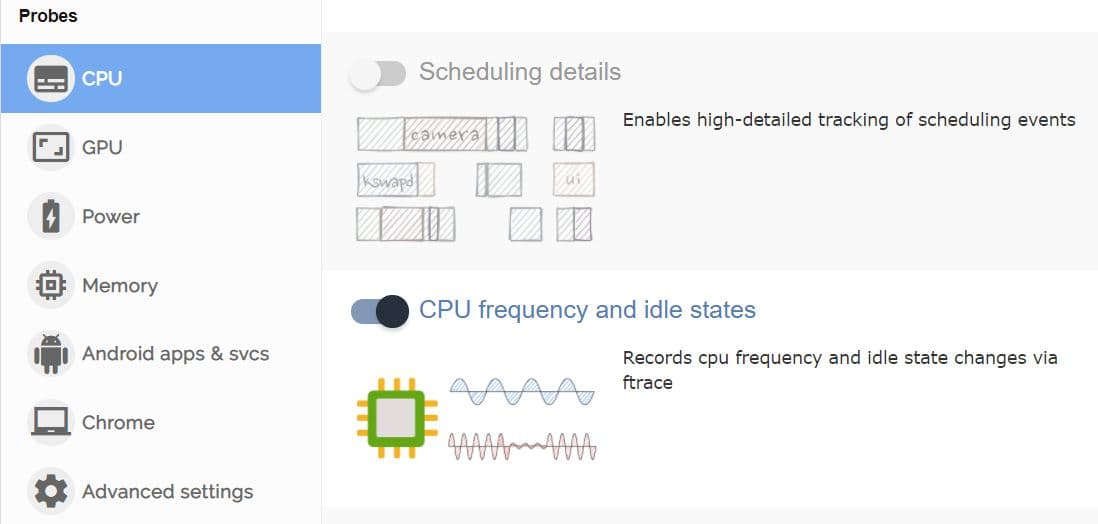

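Frequency scaling on mobile chips can make it tricky to identify your frame idle time budget allocations when profiling. Your improvements and optimizations might have a net positive effect, but the mobile device might also be scaling its frequency down and, as a result, running cooler. Use custom tooling such as FTrace or Perfetto to monitor mobile chip frequencies, idle time, and scaling before and after optimizations.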

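If you stay within your total frame time budget for your target fps (33.33 ms for 30 fps, for example) and see your device working less or logging lower temperatures while maintaining this frame rate, you're on the right track.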

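Another reason to add breathing room to the frame budget on mobile devices is to account for real-world temperature fluctuations. On a hot day, a mobile device heats up and has trouble dissipating heat, which can lead to thermal throttling and poor game performance. Set aside part of the frame budget to help avoid this scenario.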

DRAM access is typically a power-hungry operation on mobile devices. According to Arm's optimization advice for graphics content on mobile devices, LPDDR4 memory access costs approximately 100 picojoules per byte.
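To get a feel for what 100 pJ per byte means, the Python sketch below turns per-frame DRAM traffic into an approximate power figure. The 50 MB-per-frame workload is purely hypothetical. The calculation shows that, for the same per-frame traffic, doubling the frame rate doubles the memory-access power.

```python
PICOJOULES_PER_BYTE = 100  # approximate LPDDR4 access cost quoted above

def dram_power_mw(bytes_per_frame: float, fps: float) -> float:
    """Rough DRAM access power in milliwatts for a given per-frame traffic."""
    bytes_per_second = bytes_per_frame * fps
    watts = bytes_per_second * PICOJOULES_PER_BYTE * 1e-12  # pJ -> J per second
    return watts * 1000.0

# Hypothetical workload: 50 MB (decimal) of DRAM traffic per frame.
traffic = 50_000_000
print(f"30 fps: {dram_power_mw(traffic, 30):.0f} mW")  # 30 fps: 150 mW
print(f"60 fps: {dram_power_mw(traffic, 60):.0f} mW")  # 60 fps: 300 mW
```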

Reduce the number of memory access operations per frame:

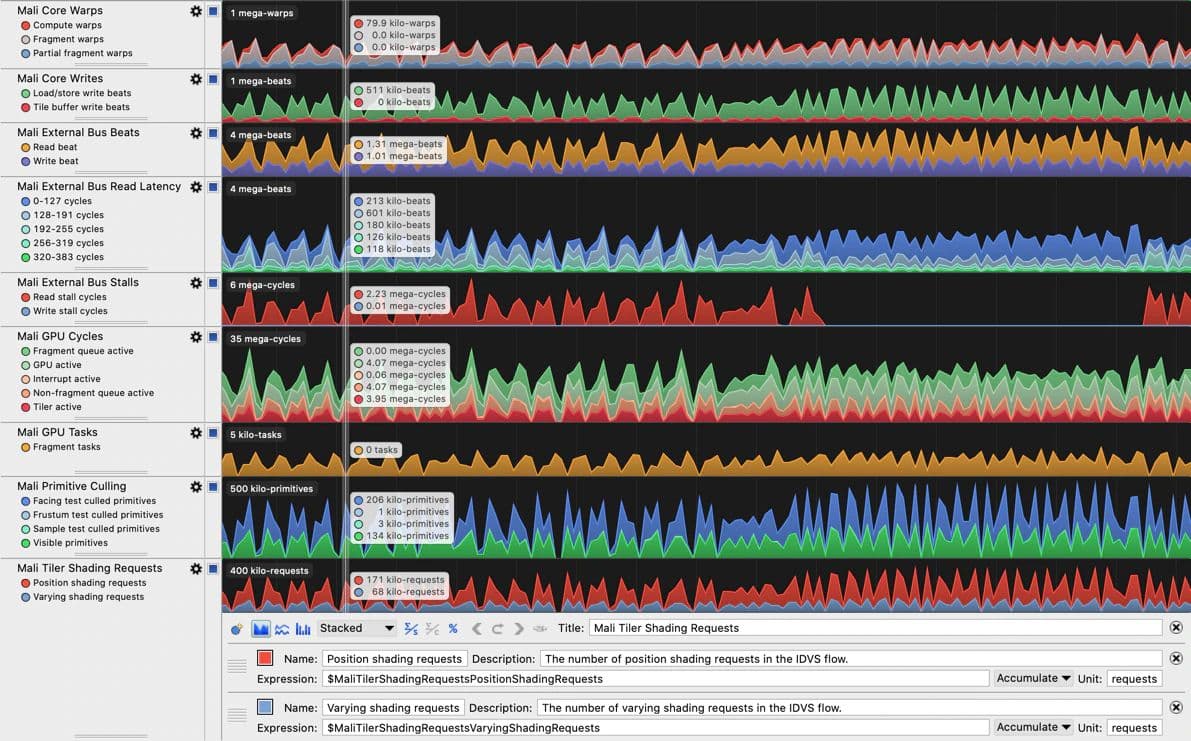

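When you need to focus on devices that use Arm CPU or GPU hardware, the Arm Performance Studio tools (specifically, Streamline Performance Analyzer) include great performance counters for identifying memory bandwidth issues. The available counters are listed and explained for each Arm GPU generation in the corresponding user guide, for example, the Mali-G710 Performance Counter Reference Guide. Note that Arm Performance Studio GPU profiling requires an Arm Immortalis or Mali GPU.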

Setting up hardware tiers for benchmarking

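In addition to using platform-specific profiling tools, establish tiers or a lowest-spec device for each platform and quality tier you want to support, then profile and optimize performance for each of these specifications.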

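For example, if you're targeting mobile platforms, you might decide to support three tiers with quality controls that turn features on or off based on the target hardware. You then optimize for the lowest device specification in each tier. As another example, if you're developing a game for consoles, make sure you profile on both older and newer versions.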

Our latest mobile optimization guide has many tips and tricks to help you reduce thermal throttling and increase battery life on mobile devices running your games.

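When profiling, focus your time and effort on the areas that can have the biggest impact. That's why a top-down approach is recommended: start with a high-level overview of categories such as rendering, scripts, physics, and garbage collection (GC) allocations. Once you've identified areas of concern, you can drill down into the finer details. Use this high-level pass to collect data and take notes on the most critical performance issues, including scenarios that cause unwanted managed allocations or excessive CPU usage in your core game loop.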

Start by gathering call stacks for GC.Alloc markers. If you're unfamiliar with this process, you'll find tips and tricks in the e-book section titled "Locating recurring memory allocations over application lifetime."

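If the reported call stacks aren't detailed enough to track down the source of allocations or other slowdowns, you can run a second profiling session with Deep Profiling enabled to find them. We cover Deep Profiling in more detail in the e-book, but in short, it's a Profiler mode that captures detailed performance data for every function call, giving granular insight into execution times and behavior, at the cost of significantly higher overhead than standard profiling.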

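When collecting notes on frame time "offenders," be sure to record how they compare to the rest of the frame. This relative impact can be distorted when Deep Profiling is enabled, because Deep Profiling adds significant overhead by instrumenting every method call.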

Profiling early

While you should always profile throughout the entire development cycle of your project, the most significant gains from profiling come when you start in the early phases.

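Profile early and often so that you and your team understand and memorize a "performance signature" for the project that you can use for benchmarking. If performance takes a nosedive, you'll be able to easily spot when things went wrong and remedy the issue.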

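Profiling in the Editor provides an easy way to identify the main issues, but the most accurate profiling results always come from running and profiling builds on target devices, together with platform-specific tools that dig into the hardware characteristics of each platform. This combination gives you a holistic view of application performance across all your target devices. For example, you might be GPU-bound on some mobile devices but CPU-bound on others, and you can only find that out by measuring on those devices.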

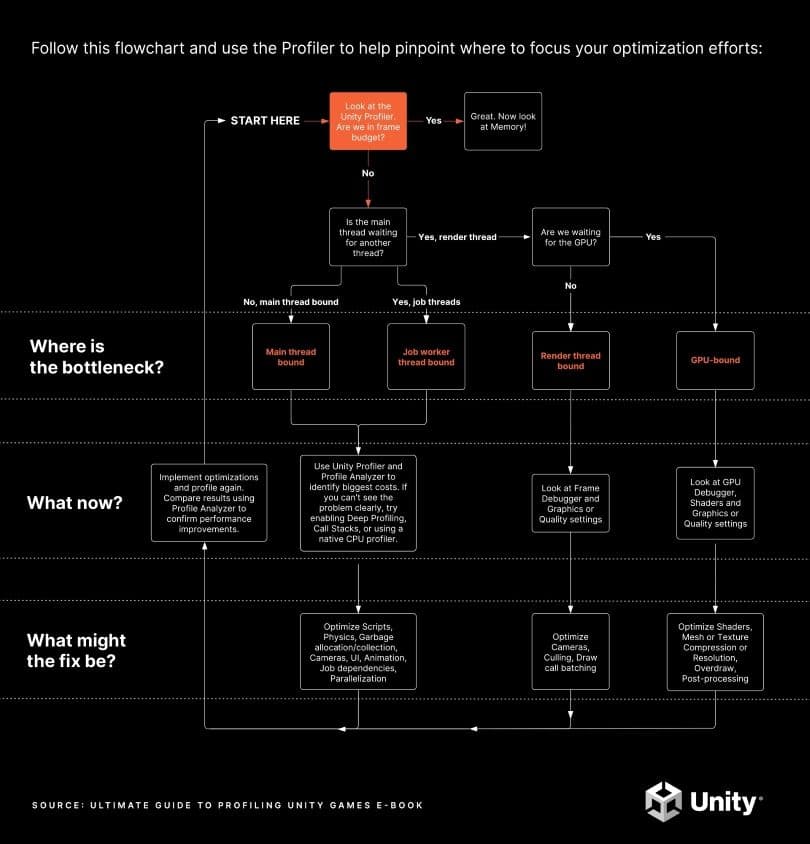

Download a printable PDF version of this chart here.

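The purpose of profiling is to identify bottlenecks as targets for optimization. If you rely on guesswork, you can end up optimizing parts of the game that aren't bottlenecks, resulting in little or no improvement to overall performance. Some "optimizations" can even worsen your game's overall performance, while others can be labor-intensive yet yield insignificant results. The key is to maximize the impact of your focused time investment.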

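The flowchart above illustrates the initial profiling process, and the sections that follow it provide detailed information on each step. They also present Profiler captures from real Unity projects to illustrate the kinds of things to look for.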

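To get a holistic picture of all CPU activity, including when the CPU is waiting for the GPU, use the Timeline view in the CPU Usage module of the Profiler. Familiarize yourself with the common Profiler markers to interpret captures correctly. Some Profiler markers can appear differently depending on your target platform, so spend time exploring captures of your game on each of your target platforms to get a feel for what a "normal" capture looks like for your project.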

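Your project's performance is bound by the chip and/or thread that takes the longest. That's the area where optimization efforts should focus. For example, imagine the following scenarios for a game with a target frame time budget of 33.33 ms and VSync enabled: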

Refer to these resources that further explore being CPU-bound or GPU-bound:

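Profiling and optimizing your project early and often, from the start of development, helps you make sure that all of your application's CPU threads and the overall GPU frame time are within the frame budget. The question that guides this process is: are you within your frame budget or not?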
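One way to phrase that question in code is the Python sketch below. It picks the slowest thread or chip in a single frame and compares it against the budget. The labels and timings are hypothetical, and the sketch assumes that time spent waiting for VSync has already been excluded.

```python
def find_bottleneck(timings_ms: dict[str, float], budget_ms: float):
    """Return (name, time_ms, within_budget) for the slowest thread or chip.

    `timings_ms` maps a label such as "main thread" or "GPU" to the time it
    spent working in one frame, excluding any wait for VSync.
    """
    name, slowest = max(timings_ms.items(), key=lambda item: item[1])
    return name, slowest, slowest <= budget_ms

# Hypothetical frame from a game targeting 30 fps (33.33 ms budget).
frame = {"main thread": 40.0, "render thread": 18.0, "GPU": 20.0}
name, time_ms, within = find_bottleneck(frame, budget_ms=1000 / 30)
print(name, time_ms, "within budget" if within else "over budget")
# main thread 40.0 over budget
```

In this made-up frame, optimizing the GPU would not raise the frame rate, because the main thread is the limiting factor.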

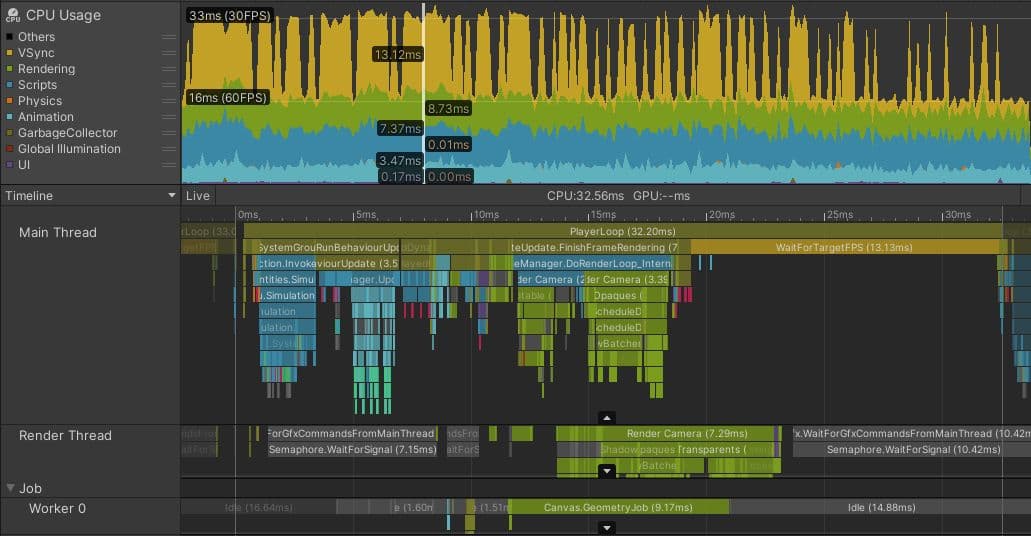

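The image above is a profile capture from a Unity mobile game developed by a team that did ongoing profiling and optimization. The game targets 60 fps on high-spec mobile phones and 30 fps on medium- and low-spec phones, such as the one in this capture.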

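Note how nearly half of the time in the selected frame is occupied by the yellow WaitForTargetFPS Profiler marker. The application has set Application.targetFrameRate to 30 fps, and VSync is enabled. The actual processing work on the main thread finishes at around the 19 ms mark, and the rest of the time is spent waiting for 33.33 ms to elapse before the next frame begins. Although this time is represented by a Profiler marker, the main CPU thread is essentially idle during it, which lets the CPU cool down and use a minimum of battery power.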
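Working through the numbers from this capture, roughly 19 ms of main-thread work against a 33.33 ms target, shows how much headroom the WaitForTargetFPS marker represents. This is plain arithmetic in Python, not a Unity API.

```python
budget_ms = 1000 / 30   # Application.targetFrameRate = 30
work_ms = 19.0          # main-thread work ends at about 19 ms in this capture
idle_ms = budget_ms - work_ms

print(f"idle: {idle_ms:.2f} ms ({idle_ms / budget_ms:.0%} of the frame)")
# idle: 14.33 ms (43% of the frame)
```

About 43% of the frame is idle, which is more than the ~35% idle headroom suggested earlier for mobile devices.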

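The markers to look out for might be different on other platforms or when VSync is disabled. The important thing is to check whether the main thread is running within your frame budget, or exactly on your frame budget with some kind of marker indicating that the application is waiting for VSync. You should also check whether the other threads have idle time.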

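Idle time is represented by gray or yellow Profiler markers. The screenshot above shows that the render thread is idling in Gfx.WaitForGfxCommandsFromMainThread, which indicates times when it has finished sending draw calls to the GPU for one frame and is waiting for more draw call requests from the CPU for the next. Similarly, although the Job Worker 0 thread spends some time in Canvas.GeometryJob, it's idle most of the time. These are all signs of an application that's running comfortably within the frame budget.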

If your game is within the frame budget

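If you're within your frame budget, including any adjustments made to the budget to account for battery usage and thermal throttling, you're done with the key profiling tasks. You can conclude by running the Memory Profiler to make sure the application is also within its memory budget.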

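The image above shows a game running comfortably within the ~22 ms frame budget required for 30 fps. Note the WaitForTargetFPS marker padding the main thread time until VSync, and the gray idle time in the render thread and worker thread. You can also observe the VBlank interval by looking at the end times of Gfx.Present from frame to frame, and you can draw a time scale in the Timeline area or on the time ruler at the top to measure the time between them.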

If your game is not within the CPU frame budget, the next step is to investigate which part of the CPU is the bottleneck, in other words, which thread is the busiest.

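It's rare for the entire CPU workload to be the bottleneck. Modern CPUs have a number of cores capable of performing work independently and simultaneously, and a different thread can run on each CPU core. A full Unity application uses a range of threads for different purposes, but the threads where performance issues are most commonly found are: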

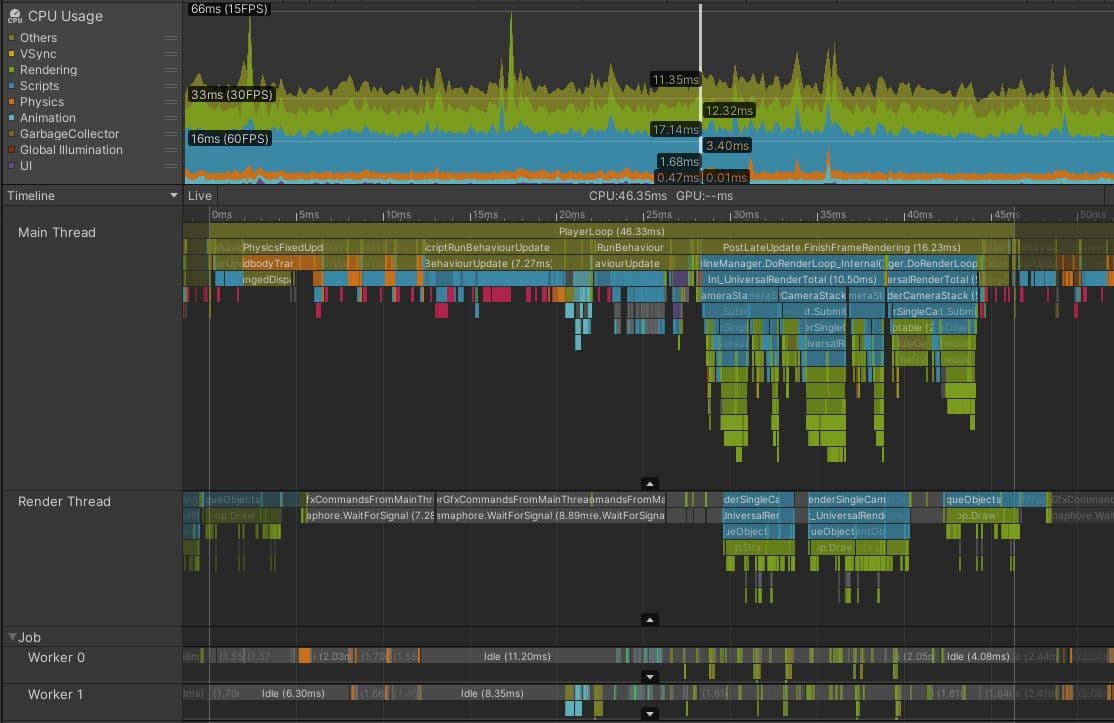

A real-world example of main thread optimization

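The image below shows how things might look in a project that's bound by the main thread. This project is running on a Meta Quest 2, which normally targets frame budgets of 13.88 ms (72 fps) or even 8.33 ms (120 fps), because high frame rates are important for avoiding motion sickness on VR devices. However, even if this game were targeting 30 fps, it's clear that this project is in trouble.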

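Although the render thread and worker threads look similar to the example that's within the frame budget, the main thread is clearly busy with work for the whole frame. Even accounting for the small amount of Profiler overhead at the end of the frame, the main thread is busy for over 45 ms, which means this project achieves a frame rate of less than 22 fps. There is no marker showing the main thread idly waiting for VSync; it's busy for the entire frame.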
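The Python arithmetic below connects the 45 ms figure to the frame rate and to the Quest 2 budgets mentioned above. The 45 ms value is a lower bound taken from the capture. The budgets are printed rounded to two decimals, so 72 fps shows as 13.89 ms instead of the truncated 13.88 ms.

```python
def fps_from_frame_time(frame_ms: float) -> float:
    """Frame rate achieved if every frame takes `frame_ms` milliseconds."""
    return 1000.0 / frame_ms

main_thread_ms = 45.0  # main-thread busy time in this capture (lower bound)
print(f"{fps_from_frame_time(main_thread_ms):.1f} fps")  # 22.2 fps

# Quest 2 targets mentioned above: 72 fps and 120 fps.
for target_fps in (72, 120):
    budget = 1000.0 / target_fps
    print(f"{target_fps} fps budget: {budget:.2f} ms, "
          f"main thread takes {main_thread_ms / budget:.1f}x the budget")
```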

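The next stage of the investigation is to identify the parts of the frame that take the longest and understand why. On this frame, PostLateUpdate.FinishFrameRendering takes 16.23 ms, more than the entire frame budget. Closer inspection reveals five instances of a marker called Inl_RenderCameraStack, indicating that five cameras are active and rendering the scene. Since every camera in Unity invokes the whole render pipeline, reducing the number of active cameras, ideally to just one, should be the highest-priority task for this project.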

BehaviourUpdate, the Profiler marker that encompasses all MonoBehaviour.Update() method executions, takes 7.27 milliseconds in this frame.

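In the Timeline view, magenta sections indicate points where scripts are allocating managed heap memory. Switching to the Hierarchy view and filtering by typing GC.Alloc in the search bar shows that allocating this memory takes about 0.33 ms in this frame. However, that's an inaccurate measurement of the impact memory allocations have on CPU performance.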

GC.Alloc markers aren't timed by recording a start and end point like typical Profiler samples. To minimize their overhead, Unity records only the timestamp of the allocation and the allocated size.

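The Profiler assigns a small, artificial sample duration to GC.Alloc markers solely to make them visible in the Profiler views. The actual allocation can take longer, especially if a new range of memory needs to be requested from the system. To see the impact more clearly, place Profiler markers around the code that performs the allocation. With Deep Profiling, the gaps between the magenta GC.Alloc samples in the Timeline view provide some indication of how long they might have taken.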
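In Unity C# code, this is typically done with Unity.Profiling.ProfilerMarker, for example by wrapping the allocating code in `using (marker.Auto())`. The Python sketch below is only an analogy for that begin/end pattern: it records the full duration of a named scope, whereas a GC.Alloc sample stores only a timestamp and a size. The `samples` store, `profiler_marker`, and `build_enemy_names` are hypothetical names used for illustration.

```python
import time
from contextlib import contextmanager

# Hypothetical stand-in for a profiler: marker name -> recorded durations (ms).
samples: dict[str, list[float]] = {}

@contextmanager
def profiler_marker(name: str):
    """Record the begin and end time around a block, like a scoped sample."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        samples.setdefault(name, []).append(elapsed_ms)

def build_enemy_names(count: int) -> list[str]:
    # Allocates a new list and new strings on every call: the kind of code
    # whose real cost a bare allocation sample would understate.
    with profiler_marker("BuildEnemyNames"):
        return [f"Enemy {i}" for i in range(count)]

names = build_enemy_names(10_000)
print(len(names), f"{samples['BuildEnemyNames'][0]:.3f} ms")
```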

Additionally, allocating new memory can have negative effects on performance that are harder to measure and to attribute directly to the allocation.

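At the start of the frame, four instances of Physics.FixedUpdate add up to 4.57 ms. Later on, LateBehaviourUpdate (calls to MonoBehaviour.LateUpdate()) takes 4 ms, and animators account for about 1 ms. To make sure this project hits its desired frame budget and rate, all of these main thread issues need to be investigated to find suitable optimizations.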
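Ranking the main-thread markers quoted for this frame makes the priority order explicit. The Python sketch below uses only those figures; the Animator value is approximate. It leaves out GC.Alloc, because that marker's reported duration is artificial.

```python
# Main-thread marker times (ms) quoted for this frame.
markers = {
    "PostLateUpdate.FinishFrameRendering": 16.23,
    "BehaviourUpdate": 7.27,
    "Physics.FixedUpdate (4 instances)": 4.57,
    "LateBehaviourUpdate": 4.0,
    "Animator": 1.0,  # approximate
}

total = sum(markers.values())
for name, ms in sorted(markers.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:<40} {ms:6.2f} ms  {ms / total:6.1%}")
print(f"{'total of listed markers':<40} {total:6.2f} ms")
```

Rendering alone accounts for about half of the listed time, which matches the advice to reduce the number of active cameras first.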

Common pitfalls for main thread bottlenecks

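The biggest performance gains will come from optimizing the things that take the most time. The following areas are often fruitful places to look for optimizations in projects that are bound by the main thread: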

Read our optimization guides, which offer a list of actionable tips for addressing the most common pitfalls:

Depending on the issue you want to investigate, other tools can also be helpful:

For comprehensive tips on optimizing your games, download these Unity expert guides for free:

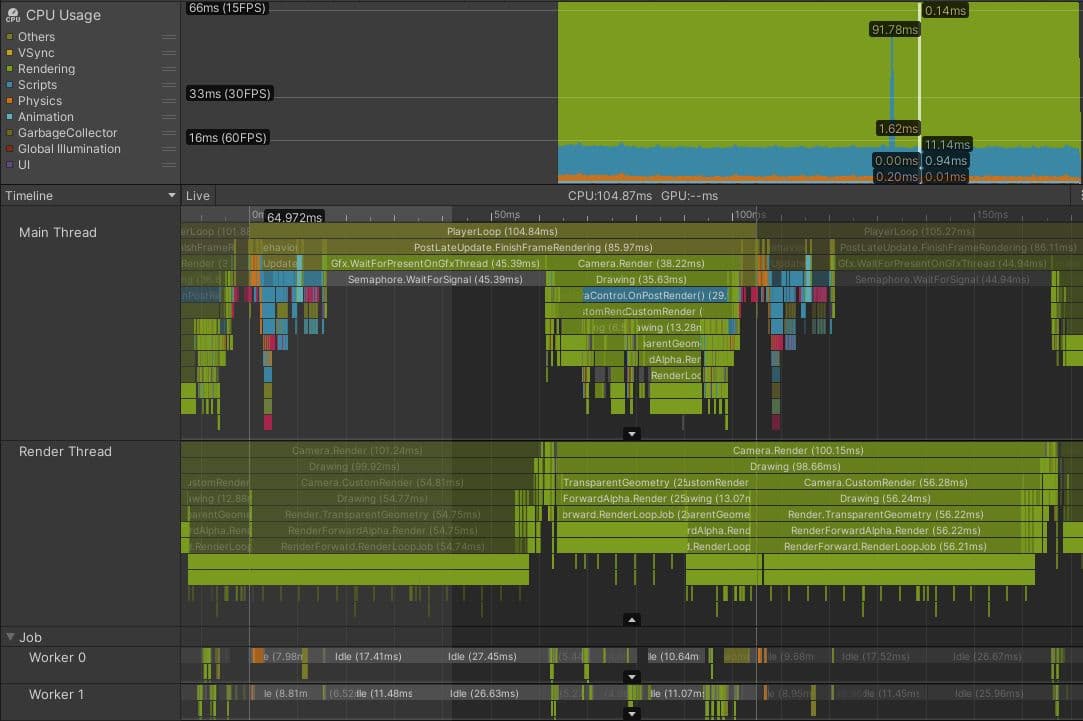

Here's an actual project that's bound by its render thread. It's a console game with an isometric viewpoint and a target frame budget of 33.33 ms.

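The Profiler capture shows that before rendering can begin on the current frame, the main thread waits for the render thread, as indicated by the Gfx.WaitForPresentOnGfxThread marker. The render thread is still submitting draw call commands from the previous frame and isn't ready to accept new draw calls from the main thread. It's also spending time in Camera.Render.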

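You can tell markers for the current frame apart from markers for other frames, because the latter appear darker. Once the main thread can continue and start issuing draw calls for the render thread to process, the render thread takes over 100 ms to process the current frame, which also creates a bottleneck during the next frame.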

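Further investigation showed that this game had a complex rendering setup, involving nine different cameras and many extra passes caused by replacement shaders. The game was also rendering over 130 point lights using the forward rendering path, which can add multiple additional transparent draw calls for each light. In total, these issues combined to create over 3000 draw calls per frame.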
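To see why cameras and lights multiply draw calls so quickly, here is a deliberately rough Python model of a forward renderer. It assumes that each visible object gets one base pass plus one additive pass for each per-pixel light affecting it. The number of additive passes is capped by a per-object limit, similar in spirit to the Pixel Light Count quality setting; the default of 4 used here is an assumption. The model also assumes that every camera draws every object, and it ignores batching, transparency, and replacement-shader passes. All numbers are hypothetical, not taken from the project above.

```python
def estimate_forward_draw_calls(cameras: int, objects: int,
                                lights_per_object: int,
                                pixel_light_limit: int = 4) -> int:
    """Very rough forward-rendering draw-call estimate.

    Assumes one base pass per object plus one additive pass per per-pixel
    light, capped at `pixel_light_limit` (default of 4 is an assumption),
    and that every camera renders every object. Batching is ignored.
    """
    additive_passes = min(lights_per_object, pixel_light_limit)
    return cameras * objects * (1 + additive_passes)

# Hypothetical scene: 100 visible objects, each lit by 2 point lights.
print(estimate_forward_draw_calls(cameras=1, objects=100, lights_per_object=2))  # 300
print(estimate_forward_draw_calls(cameras=9, objects=100, lights_per_object=2))  # 2700
```

Even in this simplified model, the camera count multiplies everything, and each additional light adds another pass per lit object.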

Common pitfalls for render thread bottlenecks

The following are common causes to investigate in projects that are bound by the render thread:

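The Rendering Profiler module shows an overview of the number of draw call batches and SetPass calls in every frame. The best tool for investigating which draw call batches your render thread issues to the GPU is the Frame Debugger.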

Tools for tackling identified bottlenecks

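Though this e-book focuses on identifying performance issues, the two complementary performance optimization guides we highlighted earlier offer suggestions for tackling bottlenecks, depending on whether your target platform is PC, console, or mobile. In the context of render thread bottlenecks, it's worth emphasizing that Unity offers different batching systems and options depending on the issues you've identified. Here's a quick overview of some of the options that we explain in more detail in the e-book: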

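On the CPU side, you can also use techniques such as Camera.layerCullDistances to cull objects based on their distance from the camera. This reduces the number of objects sent to the render thread and helps alleviate CPU bottlenecks.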
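The idea behind per-layer cull distances can be sketched in a few lines of Python. In Unity, Camera.layerCullDistances holds one distance per layer (32 entries), and 0 means that layer uses the camera's far clip plane. This sketch follows the same convention but measures straight-line (spherical) distance, which in Unity corresponds to enabling Camera.layerCullSpherical. The SceneObject type, the layer numbers, and the distances are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class SceneObject:
    name: str
    layer: int                                # 0..31, like Unity layers
    position: tuple[float, float, float]

def visible_objects(objects, camera_pos, far_clip, layer_cull_distances):
    """Keep only objects closer than their layer's cull distance.

    A distance of 0 means "use the far clip plane", mirroring the
    convention of Camera.layerCullDistances. Distance is measured spherically.
    """
    kept = []
    for obj in objects:
        limit = layer_cull_distances[obj.layer] or far_clip
        if math.dist(obj.position, camera_pos) <= limit:
            kept.append(obj)
    return kept

distances = [0.0] * 32
distances[8] = 30.0  # hypothetical "small props" layer, culled beyond 30 units
scene = [SceneObject("rock", 8, (0.0, 0.0, 50.0)),
         SceneObject("tower", 0, (0.0, 0.0, 50.0))]
print([o.name for o in visible_objects(scene, (0.0, 0.0, 0.0), 1000.0, distances)])
# ['tower']
```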

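These are just some of the available options. Each has its own advantages and drawbacks, and some are limited to certain platforms. Projects often need to use a combination of these systems, which requires understanding how to get the most out of each one.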

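Projects bound by CPU threads other than the main or render threads are not that common. However, this can happen if your project uses the Data-Oriented Technology Stack (DOTS), especially when work is moved off the main thread onto worker threads.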

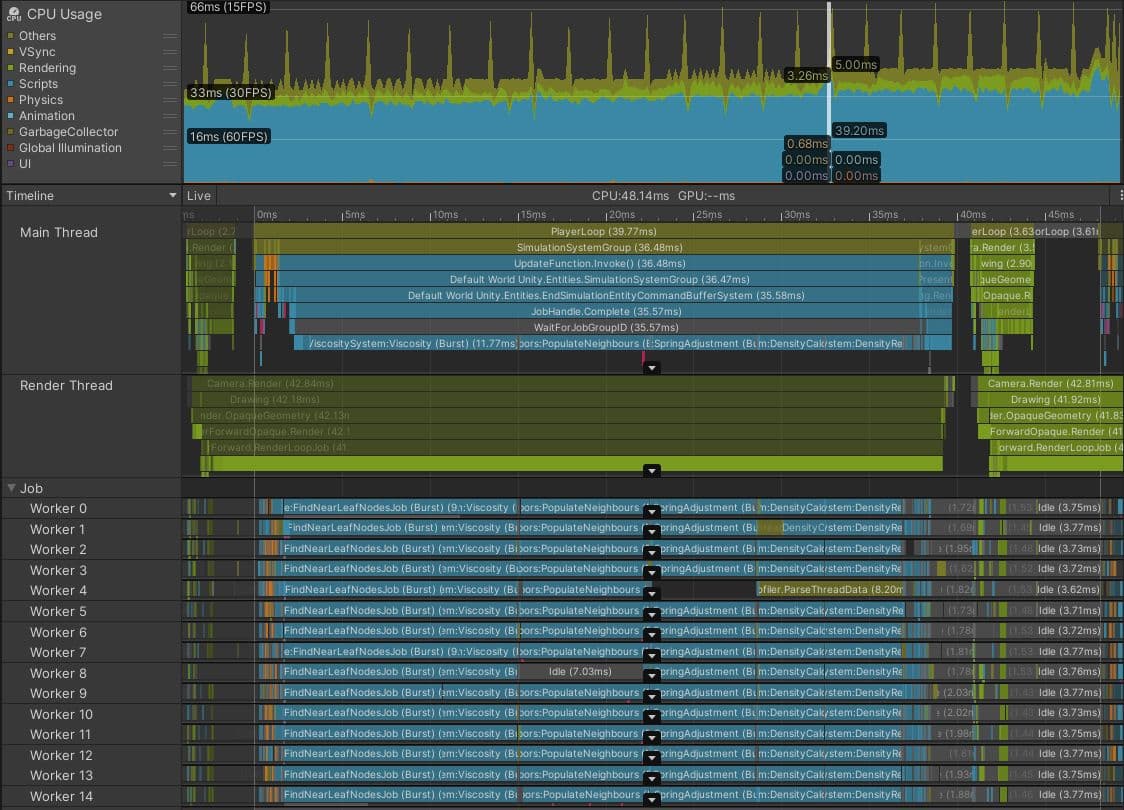

The image above is a capture from Play mode in the Editor. It shows a DOTS project running a particle fluid simulation on the CPU.

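At first glance, it looks like a success. The worker threads are tightly packed with Burst-compiled jobs, indicating that a large amount of work has been moved off the main thread. Usually, this is a sound decision.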

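However, in this case, the frame time of 48.14 ms and the 35.57 ms gray WaitForJobGroupID marker on the main thread are signs that all is not well. WaitForJobGroupID indicates that the main thread has scheduled jobs to run asynchronously on worker threads, but it needs the results of those jobs before the worker threads have finished running them. The blue Profiler markers beneath WaitForJobGroupID show the main thread running jobs itself while it waits for them to finish.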

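Although the jobs are Burst-compiled, they're still doing a lot of work. Perhaps the spatial query structure this project uses to quickly find particles that are close to each other should be optimized or swapped for a more efficient structure. Or the spatial query jobs could be scheduled at the end of the frame rather than the start, since the results aren't required until the start of the next frame. Perhaps this project is trying to simulate too many particles. Finding the solution requires further analysis of the jobs' code, so adding finer-grained Profiler markers can help identify their slowest parts.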

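The jobs in your project might not be as parallelized as in this example. Perhaps you have just one long job running on a single worker thread. That's fine, as long as the time between when the job is scheduled and when it needs to be completed is long enough for the job to run. If it isn't, you'll see the main thread stall while it waits for the job to complete, as in the screenshot above.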
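The schedule-early, complete-late pattern can be sketched with Python's concurrent.futures, as an analogy for scheduling a Unity job and later calling Complete() on its JobHandle. The `build_spatial_grid` function is a hypothetical stand-in for the spatial query job. The sketch is only about ordering: work is submitted at the end of one frame and its result is consumed at the start of the next, which gives the worker a whole frame gap to run in. Python's GIL limits true parallelism for pure-Python CPU work, so this illustrates scheduling, not speed.

```python
from concurrent.futures import ThreadPoolExecutor

def build_spatial_grid(positions, cell_size=1.0):
    """Hypothetical spatial query structure: bucket particle indices by cell."""
    grid = {}
    for index, (x, y) in enumerate(positions):
        key = (int(x // cell_size), int(y // cell_size))
        grid.setdefault(key, []).append(index)
    return grid

def run_frames(frame_positions):
    """Simulate frames that consume last frame's query result."""
    grids_used = []
    with ThreadPoolExecutor(max_workers=1) as workers:
        pending = None
        for positions in frame_positions:
            # Start of frame: collect the result scheduled at the end of the
            # previous frame (analogous to JobHandle.Complete()).
            if pending is not None:
                grids_used.append(pending.result())
            # ... the rest of this frame's main-thread work would run here ...
            # End of frame: schedule the next query so it runs between frames.
            pending = workers.submit(build_spatial_grid, positions)
        if pending is not None:
            grids_used.append(pending.result())
    return grids_used

frames = [[(0.5, 0.5), (0.7, 0.2)], [(1.5, 0.5)]]
print(run_frames(frames))  # [{(0, 0): [0, 1]}, {(1, 0): [0]}]
```

The trade-off is that each frame works with query results computed from the previous frame's positions, so this only suits data that can tolerate one frame of latency.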

Common pitfalls for worker thread bottlenecks

Common causes of sync points and worker thread bottlenecks include:

You can use the Flow Events feature in the Timeline view of the CPU Usage Profiler module to investigate when jobs are scheduled and when the main thread expects their results.

For more information about writing efficient DOTS code, see the DOTS best practices guide.

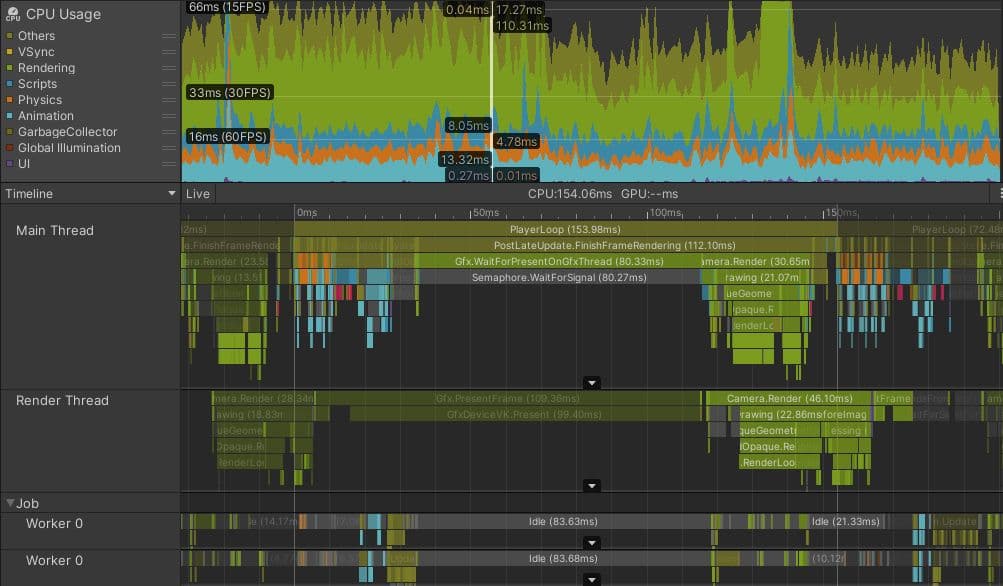

Your application is GPU-bound if the main thread spends a lot of time in Profiler markers such as Gfx.WaitForPresentOnGfxThread while your render thread simultaneously displays markers such as Gfx.PresentFrame or .WaitForLastPresent.

The best way to get GPU frame times is to use a GPU profiling tool specific to your target platform, but not every device makes it easy to capture reliable data.

The FrameTimingManager API can be helpful in those cases. It provides high-level, low-overhead frame times for both the CPU and the GPU.
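Whatever the data source, a useful next step is to average CPU and GPU frame times over several frames and compare the averages against the budget. The Python sketch below assumes you already have per-frame CPU and GPU times in milliseconds, for example values collected through FrameTimingManager. The function name and the sample numbers are hypothetical.

```python
from statistics import mean

def classify_bound(cpu_ms, gpu_ms, budget_ms):
    """Classify averaged CPU and GPU frame times against a frame budget."""
    cpu, gpu = mean(cpu_ms), mean(gpu_ms)
    if cpu <= budget_ms and gpu <= budget_ms:
        return "within budget"
    if cpu > budget_ms and gpu > budget_ms:
        return "CPU- and GPU-bound"
    # Exactly one side is over budget; it is also the slower one.
    return "CPU-bound" if cpu > gpu else "GPU-bound"

# Hypothetical samples from three frames of a 30 fps game.
print(classify_bound([20, 21, 19], [40, 42, 38], budget_ms=1000 / 30))  # GPU-bound
```

Averaging over a window smooths out single-frame noise; to catch hitches, you would also look at the worst frames rather than only the mean.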

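The capture above was taken on an Android mobile phone using the Vulkan graphics API. Although some of the time spent in Gfx.PresentFrame in this example might be related to waiting for VSync, the extreme length of this Profiler marker indicates that most of this time is spent waiting for the GPU to finish rendering the previous frame.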

In this game, certain gameplay events triggered the use of a shader that tripled the number of draw calls rendered by the GPU. Common issues to investigate when profiling GPU performance include:

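If your application appears to be GPU-bound, you can use the Frame Debugger as a quick way to understand the draw call batches being sent to the GPU. However, this tool can't present any specific GPU timing information, only how the overall scene is constructed.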

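The best way to investigate the cause of GPU bottlenecks is to examine a GPU capture from a suitable GPU profiler. Which tool you use depends on the target hardware and the chosen graphics API. See the profiling and debugging tools section in the e-book for more information.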

Find more best practices and tips in the Unity best practices hub. Choose from over 30 guides, created by industry experts, Unity engineers, and technical artists, to help you develop efficiently with Unity's toolsets and systems.