Unity Publications

2022

Practical Real-Time Hex-Tiling

Morten S. Mikkelsen - Journal of Computer Graphics Techniques (JCGT)

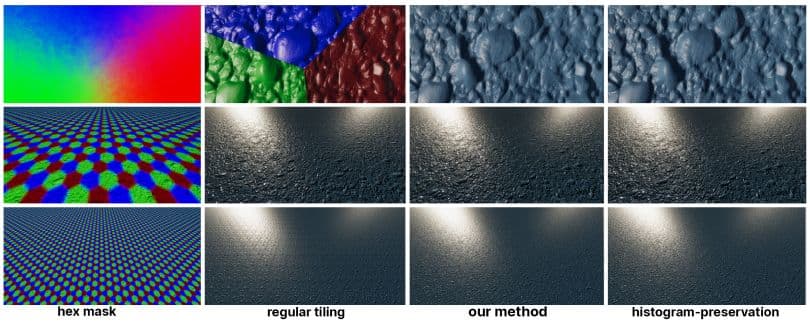

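To provide a convenient and easy-to-adopt approach to randomly tiled textures in real-time graphics, we propose an adaptation of the by-example noise algorithm of Heitz and Neyret. The original method preserves contrast using a histogram-preserving method, which requires a precomputation step that converts the source texture into a transform texture and an inverse-transform texture; both must be sampled in the shader instead of the original source texture. Making this opaque to authors of shaders and materials therefore requires deep integration into the application. In our adaptation we omit histogram preservation and replace it with a novel blending method that allows us to sample the original source texture. This omission is particularly reasonable for a normal map, since it represents the partial derivatives of a height map. To diffuse the transition between hex tiles, we introduce a simple metric to adjust the blending weights. For a color texture, we reduce the loss of contrast by applying a contrast function directly to the blending weights. Though our method works for color, we emphasize the use case of normal maps in our work, because non-repetitive noise is ideal for mimicking surface detail by perturbing normals.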
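The idea of applying a contrast function directly to the blending weights can be illustrated with a minimal Python sketch. The power-and-renormalize function and the `exponent` parameter below are assumptions chosen for illustration; they are not taken from the paper, which may use a different contrast function.

```python
def sharpen_weights(weights, exponent=4.0):
    """Apply a power-style contrast function to blend weights and renormalize.

    `exponent` is a hypothetical tuning parameter, not a value from the paper.
    """
    powered = [max(w, 0.0) ** exponent for w in weights]
    total = sum(powered)
    if total == 0.0:
        # Degenerate input: fall back to an even blend.
        return [1.0 / len(weights)] * len(weights)
    return [p / total for p in powered]


def blend(samples, weights):
    """Weighted sum of per-tile samples (scalars here for brevity)."""
    return sum(s * w for s, w in zip(samples, weights))


# Three overlapping hex tiles contribute at one point.
w = [0.5, 0.3, 0.2]
sharp = sharpen_weights(w)
value = blend([0.9, 0.1, 0.4], sharp)
```

Raising the weights to a power pushes the blend toward the dominant tile, so less of the result is an average of three unrelated texture samples, which is where the contrast loss comes from.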

ProtoRes: Proto-Residual Architecture for Deep Modeling of Human Pose

Boris N. Oreshkin, Florent Bocquelet, Félix G. Harvey, Bay Raitt, Dominic Laflamme - ICLR 2022 (oral presentation, top 5% of accepted papers)

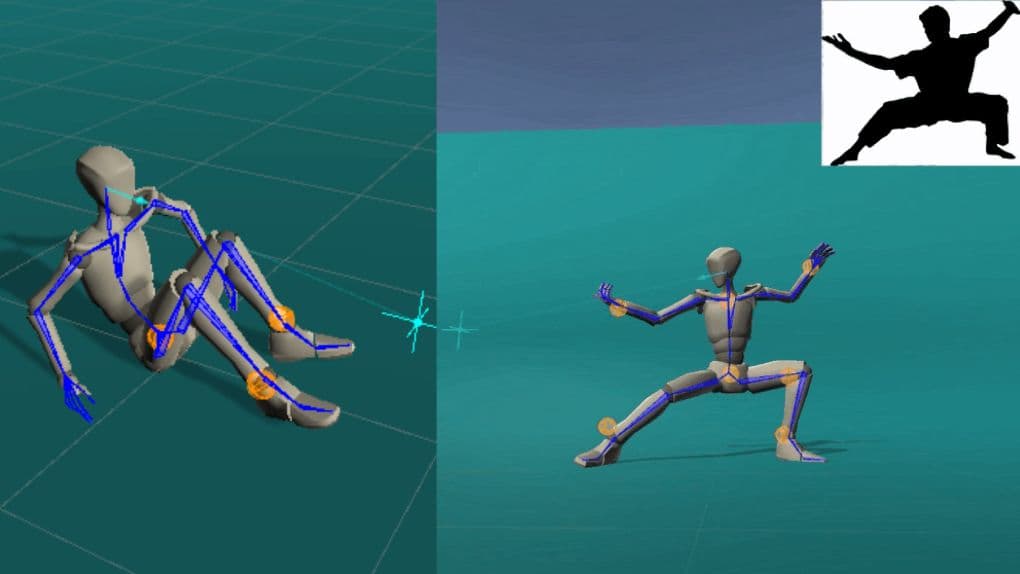

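Our work focuses on the development of a learnable neural representation of human pose for advanced AI-assisted animation tooling. Specifically, we tackle the problem of constructing a full static human pose from sparse and variable user inputs (e.g. positions and/or orientations of a subset of body joints). To solve this problem, we propose a novel neural architecture that combines residual connections with prototype encoding of a partially specified pose to create a new complete pose from the learned latent space. We show that our architecture outperforms a Transformer-based baseline in both accuracy and computational efficiency. Additionally, we develop a user interface that integrates our neural model into Unity, a real-time 3D development platform. Furthermore, we introduce two new datasets representing the static human pose modeling problem, based on high-quality human motion capture data, which will be released publicly along with the model code.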

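Htex: Per-Halfedge Texturing for Arbitrary Mesh Topologies

Wilhem Barbier, Jonathan Dupuy - HPG 2022

We introduce per-halfedge texturing (Htex), a GPU-friendly method for texturing arbitrary polygon meshes without an explicit parameterization. Htex builds upon the insight that halfedges encode an intrinsic triangulation for polygon meshes, where each halfedge spans a unique triangle with direct adjacency information. Rather than storing a separate texture per face of the input mesh, as previous parameterization-free texturing methods do, Htex stores a square texture for each halfedge and its twin. We show that this simple change from face to halfedge induces two important properties for high-performance parameterization-free texturing. First, Htex natively supports arbitrary polygons without requiring dedicated code for, e.g., non-quad faces. Second, Htex leads to a straightforward and efficient GPU implementation that uses only three texture fetches per halfedge to produce continuous texturing across the entire mesh. We demonstrate the effectiveness of Htex by rendering production assets in real time.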

A Data-Driven Paradigm for Precomputed Radiance Transfer

Laurent Belcour, Thomas Deliot, Wilhem Barbier, Cyril Soler - HPG 2022

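In this work, we explore a change of paradigm to build Precomputed Radiance Transfer (PRT) methods in a data-driven way. This paradigm shift allows us to alleviate the difficulties of building traditional PRT methods, such as defining a reconstruction basis or coding a dedicated path tracer to compute a transfer function. Our objective is to pave the way for machine-learned methods by providing a simple baseline algorithm. More specifically, we demonstrate real-time rendering of indirect illumination in hair and surfaces from a few measurements of direct lighting. We build our baseline from pairs of direct and indirect illumination renderings, using only standard tools such as Singular Value Decomposition (SVD) to extract both the reconstruction basis and the transfer function.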

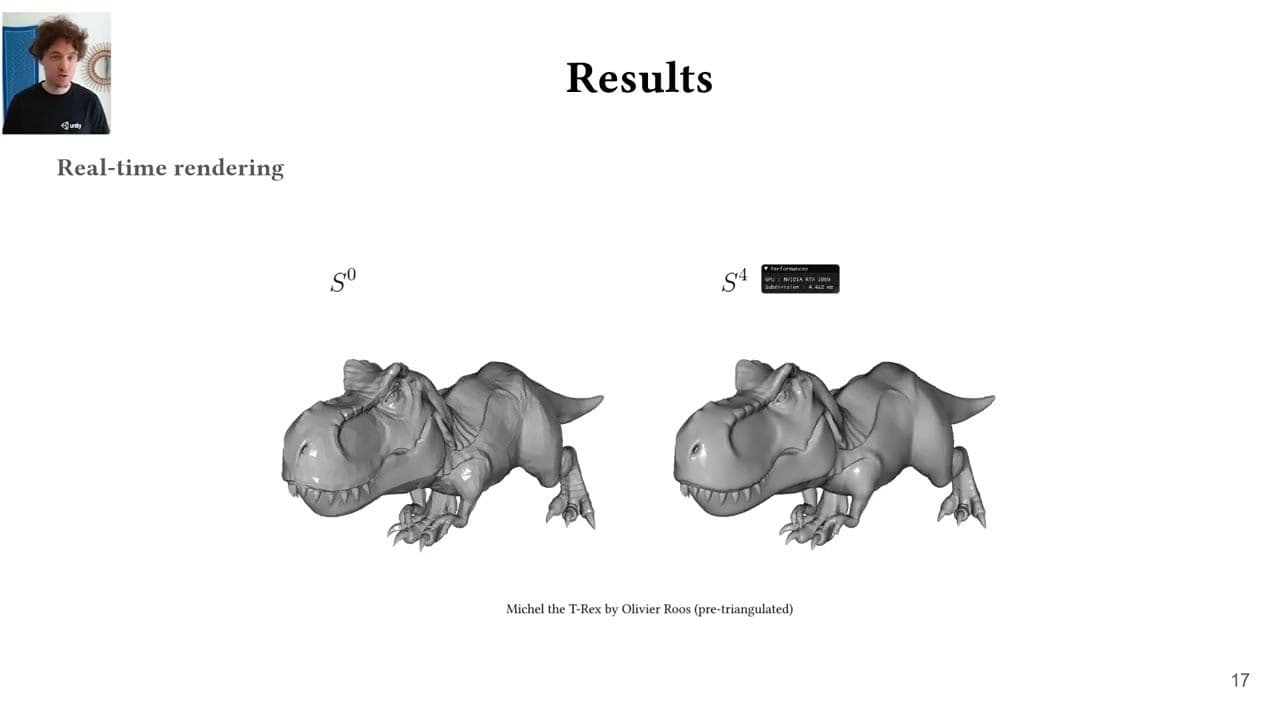

A Halfedge Refinement Rule for Parallel Loop Subdivision

Laurent Belcour, Thomas Deliot, Wilhem Barbier, Cyril Soler - HPG 2022

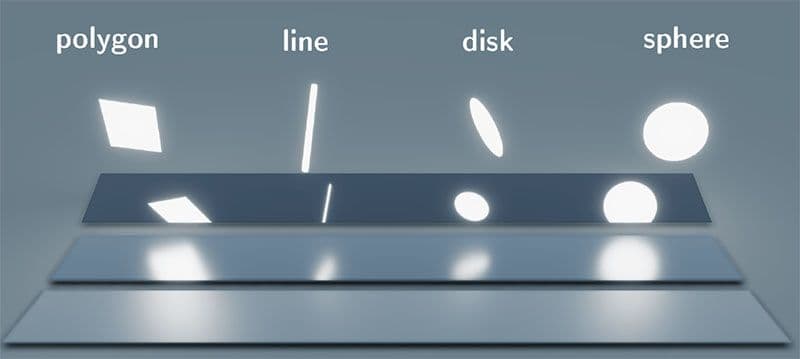

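Bringing Linearly Transformed Cosines to Anisotropic GGX

Aakash KT, Eric Heitz, Jonathan Dupuy, P. J. Narayanan - I3D 2022

Linearly Transformed Cosines (LTCs) are a family of distributions that are used for real-time area-light shading thanks to their analytic integration properties. Modern game engines use an LTC approximation of the ubiquitous GGX model, but currently this approximation only exists for isotropic GGX, and thus anisotropic GGX is not supported. While the higher dimensionality presents a challenge in itself, we show that several additional problems arise when fitting, post-processing, storing, and interpolating LTCs in the anisotropic case. Each of these operations must be done carefully to avoid rendering artifacts. We find robust solutions for each operation by introducing and exploiting invariance properties of LTCs. As a result, we obtain a small 8^4 look-up table that provides a plausible and artifact-free LTC approximation to anisotropic GGX and brings it to real-time area-light shading.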

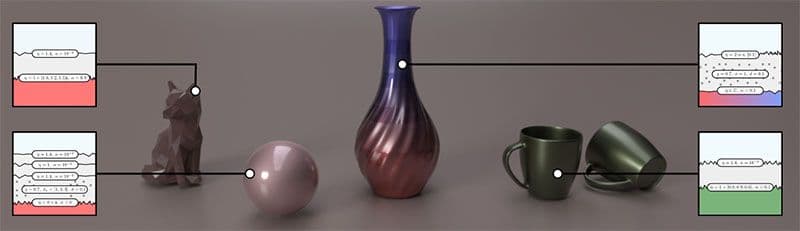

Rendering Layered Materials with Diffuse Interfaces

Heloise de Dinechin, Laurent Belcour - I3D 2022

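In this work, we introduce a novel method to render, in real time, Lambertian surfaces with a rough dielectric coating. We show that the appearance of such configurations is faithfully represented with two microfacet lobes accounting for direct and indirect interactions respectively. We numerically fit these lobes based on the first-order directional statistics (energy, mean, and variance) of light transport using 5D tables, and narrow them down to 2D + 1D with analytical forms and dimension reduction. We demonstrate the quality of our method by efficiently rendering rough plastics and ceramics, closely matching ground truth. In addition, we improve a state-of-the-art layered material model to include Lambertian interfaces.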

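FC-GAGA: Fully Connected Gated Graph Architecture for Spatio-Temporal Traffic Forecasting

Boris N. Oreshkin, Arezou Amini, Lucy Coyle, Mark J. Coates - AAAI 2021

Forecasting of multivariate time series is an important problem with applications in traffic management, cellular network configuration, and quantitative finance. A special case of the problem arises when a graph is available that captures the relationships between the time series. In this paper, we propose a novel learning architecture that achieves performance competitive with or better than the best existing algorithms, without requiring knowledge of the graph. The key element of our proposed architecture is the learnable fully connected hard graph gating mechanism, which enables the use of a state-of-the-art and highly computationally efficient fully connected time-series forecasting architecture in traffic forecasting applications. Experimental results for two public traffic network datasets illustrate the value of our approach, and ablation studies confirm the importance of each element of the architecture.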

A Sliced Wasserstein Loss for Neural Texture Synthesis

Eric Heitz, Kenneth Vanhoey, Thomas Chambon, Laurent Belcour - to appear at CVPR 2021

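We address the problem of computing a textural loss based on the statistics extracted from the feature activations of a convolutional neural network optimized for object recognition (e.g. VGG-19). The underlying mathematical problem is the measure of the distance between two distributions in feature space. The Gram-matrix loss is the ubiquitous approximation for this problem, but it is subject to several shortcomings. Our goal is to promote the Sliced Wasserstein Distance as a replacement for it. It is theoretically proven, practical, simple to implement, and achieves results that are visually superior for texture synthesis by optimization or by training generative neural networks.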
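As a rough illustration of why the sliced Wasserstein distance is simple to implement, the following Python sketch projects two sets of feature vectors onto random directions, sorts the projections, and averages the squared differences. It is a generic sketch of the distance on plain lists, assuming equal sample counts; the function names, the default of 32 directions, and the seeding are illustrative choices and not the authors' code.

```python
import math
import random


def random_unit_vector(dim, rng):
    """Draw a direction uniformly on the unit sphere via normalized Gaussians."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def sliced_wasserstein(features_a, features_b, num_directions=32, seed=0):
    """Average squared 1D Wasserstein-2 distance over random projections.

    Both inputs are lists of equal length holding feature vectors of equal
    dimension; equal sample counts reduce the 1D matching to a plain sort.
    """
    assert len(features_a) == len(features_b)
    rng = random.Random(seed)
    dim = len(features_a[0])
    total = 0.0
    for _ in range(num_directions):
        d = random_unit_vector(dim, rng)
        proj_a = sorted(sum(x * y for x, y in zip(f, d)) for f in features_a)
        proj_b = sorted(sum(x * y for x, y in zip(f, d)) for f in features_b)
        total += sum((a - b) ** 2 for a, b in zip(proj_a, proj_b)) / len(proj_a)
    return total / num_directions


a = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
b = list(reversed(a))                      # same distribution, different order
c = [[x + 5.0, y + 5.0] for x, y in a]     # shifted distribution
same = sliced_wasserstein(a, b)
shifted = sliced_wasserstein(a, c)
```

Because each projection is one-dimensional, the optimal matching between the two sample sets is obtained by sorting, which is what keeps the loss cheap compared with a full optimal-transport solve.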

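Improved Shader and Texture Level of Detail Using Ray Cones

Tomas Akenine-Möller, Cyril Crassin, Jakub Boksansky, Laurent Belcour, Alexey Panteleev, Oli Wright - published in the Journal of Computer Graphics Techniques (JCGT)

In real-time ray tracing, texture filtering is an important technique for increasing image quality. Current games, such as Minecraft with RTX on Windows 10, use ray cones to determine texture-filtering footprints. In this paper, we present several improvements to the ray-cone algorithm that improve image quality and performance and make it easier to adopt in game engines. We show that the total time per frame can decrease by around 10% in a GPU-based path tracer, and we provide a public-domain implementation.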

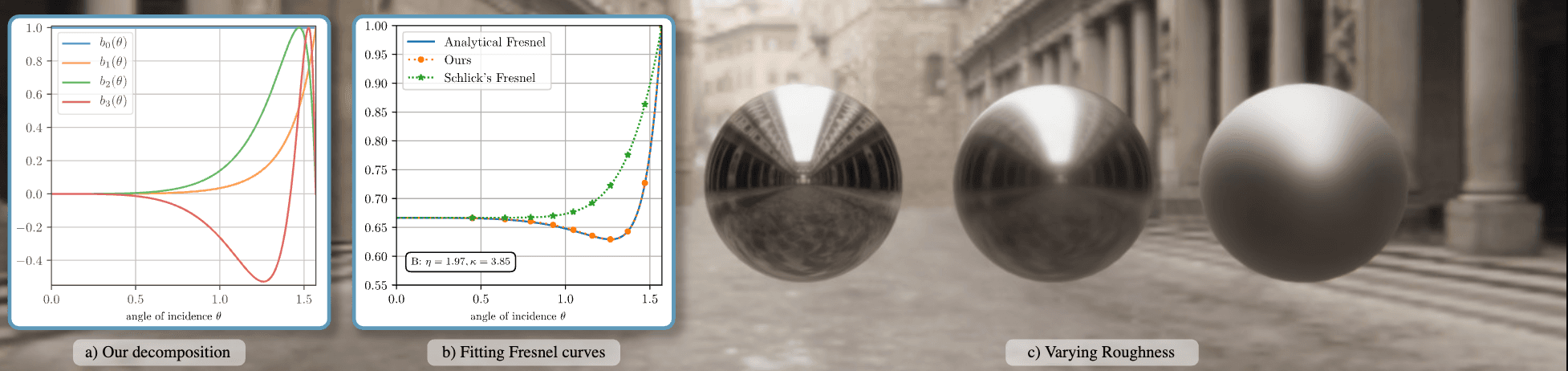

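Bringing an Accurate Fresnel to Real-Time Rendering: a Preintegrable Decomposition

Laurent Belcour, Megane Bati, Pascal Barla - published in ACM SIGGRAPH 2020 Talks and Courses

We introduce a new approximate Fresnel reflectance model that enables the accurate reproduction of ground-truth reflectance in real-time rendering engines. Our method is based on an empirical decomposition of the space of possible Fresnel curves. It is compatible with the preintegration of image-based lighting and area lights used in real-time engines. Our work enables the use of a reflectance parameterization [Gulbrandsen 2014] that was previously restricted to offline rendering.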

Concurrent Binary Trees

Jonathan Dupuy - HPG 2020

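We introduce the concurrent binary tree (CBT), a novel concurrent representation for building and updating arbitrary binary trees in parallel. Fundamentally, our representation consists of a binary heap, i.e., a 1D array, that explicitly stores the sum-reduction tree of a bitfield. In this bitfield, each one-valued bit represents a leaf node of the binary tree encoded by the CBT, which we locate using a binary-search algorithm over the sum reduction. We show that this construction allows us to dispatch down to one thread per leaf node and that, in turn, these threads can safely split and/or remove nodes concurrently via simple bitwise operations over the bitfield. The practical benefit of CBTs lies in their ability to accelerate binary-tree-based algorithms with parallel processors. To support this claim, we leverage our representation to accelerate a longest-edge-bisection-based algorithm that computes and renders adaptive geometry for large-scale terrains entirely on the GPU. For this specific algorithm, the CBT accelerates processing speed linearly with the number of processors.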
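The sum-reduction tree and the binary search over it can be sketched in a few lines of Python. This is a simplified, sequential illustration under stated assumptions: it stores one integer per heap entry instead of a packed bitfield, it omits the mapping from a bit position to a node of the encoded binary tree, and the function names are hypothetical.

```python
def build_sum_reduction(bits):
    """Return a binary heap (1-indexed list) whose leaves are `bits`.

    len(bits) must be a power of two. heap[1] ends up holding the number
    of one-valued bits, i.e. the number of leaf nodes.
    """
    n = len(bits)
    assert n > 0 and n & (n - 1) == 0, "bitfield size must be a power of two"
    heap = [0] * (2 * n)
    heap[n:] = bits
    for k in range(n - 1, 0, -1):
        heap[k] = heap[2 * k] + heap[2 * k + 1]
    return heap


def find_leaf_bit(heap, leaf_index):
    """Binary-search the sum reduction for the position of the
    `leaf_index`-th one-valued bit (0-based)."""
    n = len(heap) // 2
    assert 0 <= leaf_index < heap[1]
    k = 1
    while k < n:
        left_count = heap[2 * k]
        if leaf_index < left_count:
            k = 2 * k                 # target lies in the left subtree
        else:
            leaf_index -= left_count  # skip the leaves on the left
            k = 2 * k + 1
    return k - n


bits = [1, 0, 0, 1, 1, 0, 1, 0]
heap = build_sum_reduction(bits)
positions = [find_leaf_bit(heap, i) for i in range(heap[1])]
```

Since `heap[1]` gives the leaf count, a parallel implementation can launch that many threads and have thread `i` call the search with its own index, which is the one-thread-per-leaf dispatch the abstract describes.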

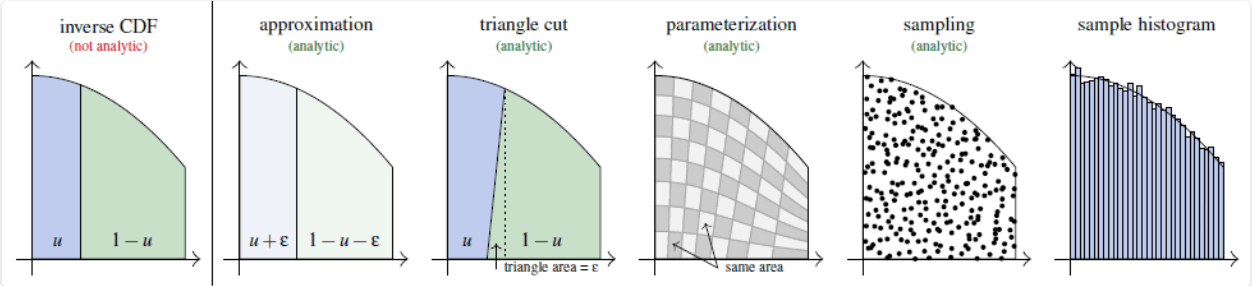

Can't Invert the CDF? The Triangle-Cut Parameterization of the Region under the Curve

Eric Heitz - EGSR 2020

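We present an exact, analytic, and deterministic method for sampling densities whose Cumulative Distribution Functions (CDFs) cannot be inverted analytically. Indeed, the inverse-CDF method is often considered the way to go for sampling non-uniform densities. If the CDF is not analytically invertible, the typical fallback solutions are either approximate, numerical, or non-deterministic, such as acceptance-rejection. To overcome this problem, we show how to compute an analytic area-preserving parameterization of the region under the curve of the target density. We use it to generate random points uniformly distributed under the curve of the target density, so that their abscissae are distributed according to the target density. Technically, our idea is to use an approximate analytic parameterization whose error can be represented geometrically as a triangle that is simple to cut out. This triangle-cut parameterization yields exact and analytic solutions to sampling problems that were presumably not analytically resolvable.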

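Rendering Layered Materials with Anisotropic Interfaces

Philippe Weier, Laurent Belcour - published in the Journal of Computer Graphics Techniques (JCGT)

We present a lightweight and efficient method to render layered materials with anisotropic interfaces. Our work extends a previously published statistical framework to handle anisotropic microfacet models. A key insight of our work is that, when projected onto the tangent plane, the BRDF lobes of an anisotropic GGX distribution are well approximated by ellipsoidal distributions aligned with the tangent frame: their covariance matrix is diagonal in this space. We leverage this property and perform the isotropic layered algorithm on each anisotropy axis independently. We further update the mapping from roughness to directional variance and the evaluation of the average reflectance to account for anisotropy.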

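Integration and Simulation of Bivariate Projective-Cauchy Distributions within Arbitrary Polygonal Domains

Jonathan Dupuy, Laurent Belcour, and Eric Heitz - Technical report, 2019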

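Consider a uniform variate on the unit upper hemisphere of dimension d. It is known that the straight-line projection through the center of the unit sphere onto the plane above it distributes this variate according to a d-dimensional projective-Cauchy distribution. In this work, we leverage the geometry of this construction in dimension d=2 to derive new properties for the bivariate projective-Cauchy distribution. Specifically, we reveal via geometric intuition that integrating and simulating a bivariate projective-Cauchy distribution within an arbitrary domain translates into, respectively, measuring and sampling the solid angle subtended by the geometry of this domain as seen from the origin of the unit sphere. To make this result practical for, e.g., generating truncated variants of the bivariate projective-Cauchy distribution, we extend it in two respects. First, we provide a generalization to Cauchy distributions parameterized by location-scale-correlation coefficients. Second, we provide a specialization to polygonal domains, which leads to closed-form expressions. We provide a complete MATLAB implementation for the case of triangular domains, and briefly discuss the case of elliptical domains and how to further extend our results to bivariate Student distributions.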
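The construction in the first two sentences can be checked numerically with a short Python sketch. It covers only the standard (unparameterized) bivariate case with the plane placed at z = 1, and uses a centered disk as the domain because its solid angle has a simple closed form; the function names are hypothetical and this is not the report's MATLAB implementation.

```python
import math
import random


def sample_projective_cauchy(rng):
    """Sample a uniform direction on the upper hemisphere and project it
    through the sphere center onto the plane z = 1."""
    # Uniform hemisphere: z uniform in (0, 1], azimuth uniform in [0, 2*pi).
    z = 1.0 - rng.random()  # in (0, 1], avoids division by zero
    phi = 2.0 * math.pi * rng.random()
    r = math.sqrt(max(0.0, 1.0 - z * z))
    x, y = r * math.cos(phi), r * math.sin(phi)
    return x / z, y / z


def disk_probability(radius):
    """Probability mass inside a centered disk: the solid angle of the
    matching cone divided by the hemisphere's 2*pi."""
    return 1.0 - 1.0 / math.sqrt(1.0 + radius * radius)


rng = random.Random(1)
samples = [sample_projective_cauchy(rng) for _ in range(20000)]
inside = sum(1 for x, y in samples if x * x + y * y <= 1.0) / len(samples)
expected = disk_probability(1.0)
```

Here `inside` is a Monte Carlo estimate of the integral over the unit disk and `expected` is the same quantity obtained by measuring a solid angle, which is the equivalence between integration and solid-angle measurement that the abstract states for arbitrary domains.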

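Surface Gradient Based Bump Mapping Framework

Morten Mikkelsen - 2020

We propose a new framework for layering/compositing bump/normal maps, including support for multiple sets of texture coordinates as well as procedurally generated texture coordinates and geometry. Furthermore, we provide proper support and integration for bump maps defined on a volume, such as decal projectors, triplanar projection, and noise-based functions.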

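Multi-Stylization of Video Games in Real-Time guided by G-buffer Information

Adèle Saint-Denis, Kenneth Vanhoey, Thomas Deliot - HPG 2019

We investigate how to take advantage of modern neural style transfer techniques to modify the style of video games at runtime. Recent style transfer neural networks are pre-trained and allow fast style transfer of any style at runtime. However, a single style applies globally, over the full image, whereas we would like to provide finer authoring tools to the user. In this work, we allow the user to assign styles (by means of a style image) to various physical quantities found in the G-buffer of a deferred rendering pipeline, such as depth, normals, or object ID. Our algorithm then interpolates those styles smoothly according to the scene to be rendered: e.g., a different style arises for different objects, depths, or orientations.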

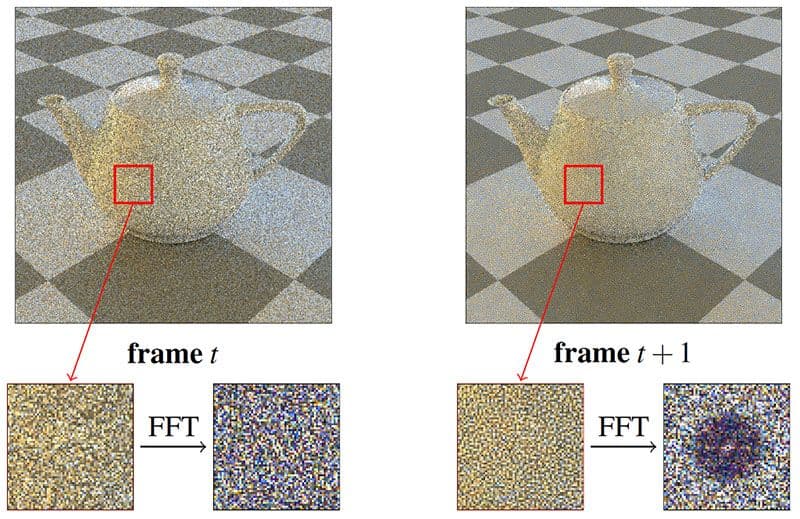

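Distributing Monte Carlo Errors as a Blue Noise in Screen Space by Permuting Pixel Seeds Between Frames

Eric Heitz, Laurent Belcour - EGSR 2019

We introduce a sampler that generates per-pixel samples achieving high visual quality thanks to two key properties related to the Monte Carlo errors that it produces. First, the sequence of each pixel is an Owen-scrambled Sobol sequence that has state-of-the-art convergence properties. The Monte Carlo errors thus have low magnitudes. Second, these errors are distributed as a blue noise in screen space. This makes them visually even more acceptable. Our sampler is lightweight and fast. We implement it with a small texture and two xor operations. Our supplemental material provides comparisons against previous work for different scenes and sample counts.

A Low-Discrepancy Sampler that Distributes Monte Carlo Errors as a Blue Noise in Screen Space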

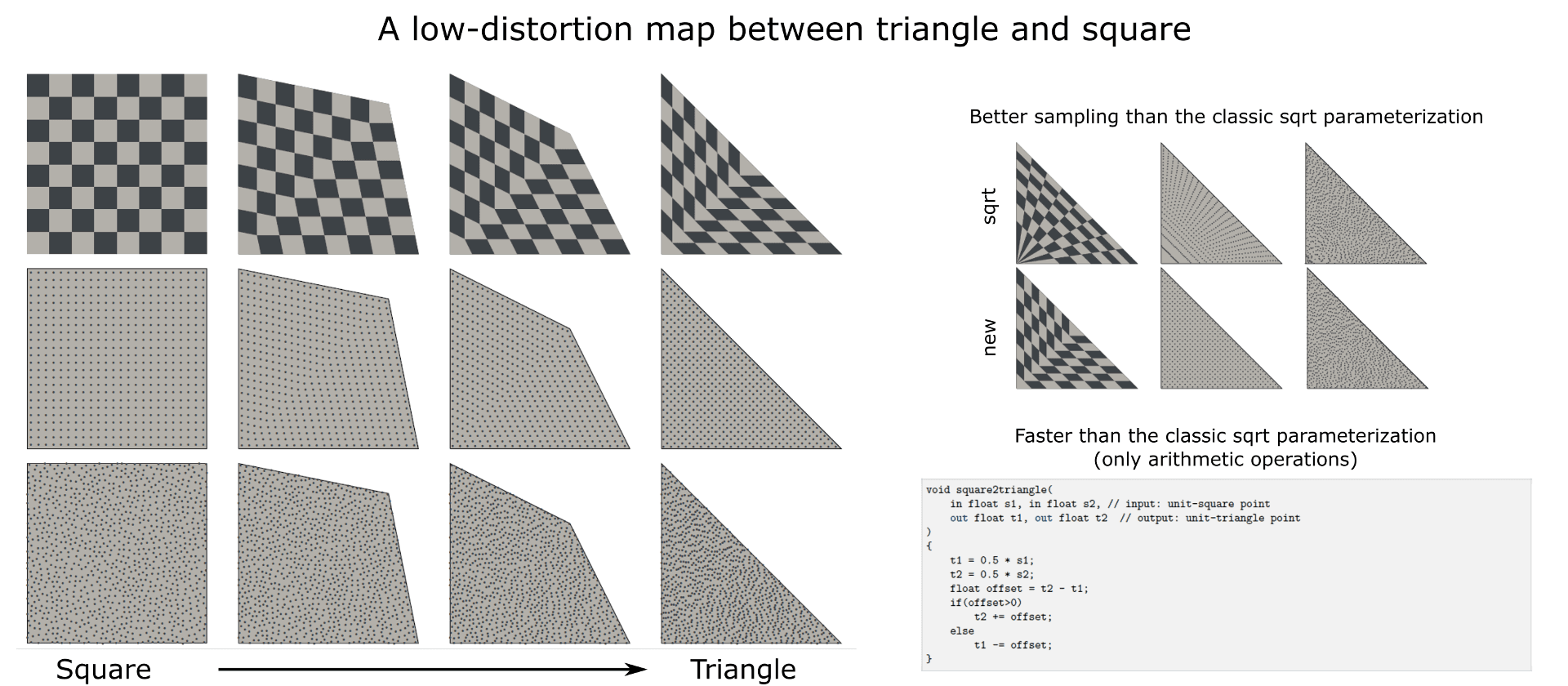

A Low-Distortion Map Between Triangle and Square

Eric Heitz - Technical report, 2019

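We introduce a low-distortion map between triangle and square. This mapping yields an area-preserving parameterization that can be used for sampling random points with a uniform density in arbitrary triangles.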
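A minimal Python sketch of one area-preserving square-to-triangle map of this kind is shown below. The two-branch form is written from memory of the report and should be treated as an assumption rather than a verbatim transcription; the function names are hypothetical. Each branch is linear with Jacobian determinant 1/2, so the unit square maps onto the unit right triangle with uniform density.

```python
import random


def square_to_triangle(u, v):
    """Map (u, v) in the unit square to barycentric coordinates of a triangle.

    Each branch is a linear map with determinant 1/2, so area is preserved
    up to the constant factor between the square and the triangle.
    """
    if v > u:
        u *= 0.5
        v -= u
    else:
        v *= 0.5
        u -= v
    return u, v, 1.0 - u - v


def sample_triangle(a, b, c, rng):
    """Uniform random point in triangle (a, b, c), each a tuple of coordinates."""
    w0, w1, w2 = square_to_triangle(rng.random(), rng.random())
    return tuple(w0 * pa + w1 * pb + w2 * pc for pa, pb, pc in zip(a, b, c))


rng = random.Random(7)
point = sample_triangle((0.0, 0.0), (2.0, 0.0), (0.0, 3.0), rng)
```

Unlike the common square-root warp, this map needs only a comparison, a halving, and a subtraction, and it does not collapse one edge of the square onto a single vertex.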

Sampling the GGX Distribution of Visible Normals

Analytical Calculation of the Solid Angle Subtended by an Arbitrarily Positioned Ellipsoid to a Point Source

A note on track-length sampling with non-exponential distributions

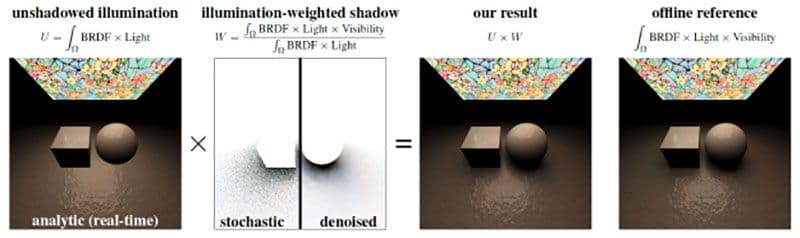

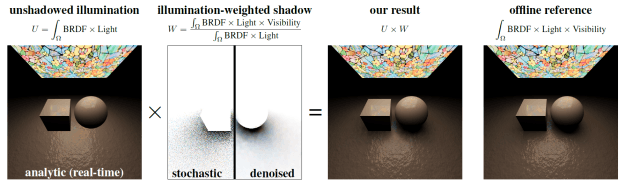

Combining Analytic Direct Illumination and Stochastic Shadows

Non-Periodic Tiling of Procedural Noise Functions

High-Performance By-Example Noise using a Histogram-Preserving Blending Operator

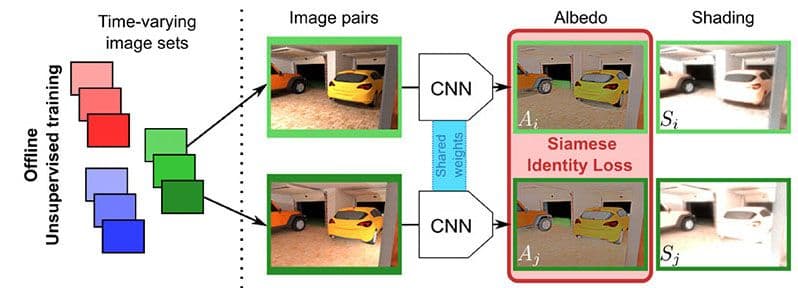

Unsupervised Deep Single-Image Intrinsic Decomposition using Illumination-Varying Image Sequences

2018-2016

Efficient Rendering of Layered Materials using an Atomic Decomposition with Statistical Operators

An Adaptive Parameterization for Material Acquisition and Rendering

Stochastic Shadows

Adaptive GPU Tessellation with Compute Shaders

Real-Time Line- and Disk-Light Shading with Linearly Transformed Cosines

Microfacet-based Normal Mapping for Robust Monte Carlo Path Tracing

A Spherical Cap Preserving Parameterization for Spherical Distributions

A Practical Extension to Microfacet Theory for the Modeling of Varying Iridescence

Linear-Light Shading with Linearly Transformed Cosines