Unity's PlayerLoop contains functions for interacting with the core of the game engine. This structure includes a number of systems that handle initialization and per-frame updates. All of your scripts rely on the PlayerLoop to create gameplay. When profiling, you'll see your project's user code under the PlayerLoop, with Editor components under the EditorLoop.

It's important to understand the execution order of Unity's frame loop. Every Unity script runs several event functions in a predetermined order. Learning the difference between Awake, Start, Update, and the other functions that make up a script's lifecycle helps you write better-performing code.

For example, use FixedUpdate instead of Update when working with a Rigidbody, and use Awake rather than Start to initialize variables or game state before the game begins. Choosing the right event function helps minimize the code that runs on each frame. Awake is called only once during the lifetime of the script instance, and always before any Start function. This means you should use Start for work that communicates with or queries other objects, because by then those objects have been initialized.
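The split between Awake, Start, and FixedUpdate can be sketched as follows. This is a minimal illustration, not a prescribed layout: `ScoreBoard`, `Pickup`, and `Register` are hypothetical names, and the sketch assumes both objects are active in the same scene when it loads.

```csharp
using System.Collections.Generic;
using UnityEngine;

// Hypothetical components for illustration; in a project, each class lives in its own file.
public class ScoreBoard : MonoBehaviour
{
    private List<Pickup> pickups;

    // Awake: set up this object's own state.
    private void Awake()
    {
        pickups = new List<Pickup>();
    }

    public void Register(Pickup pickup)
    {
        pickups.Add(pickup);
    }
}

[RequireComponent(typeof(Rigidbody))]
public class Pickup : MonoBehaviour
{
    [SerializeField] private ScoreBoard scoreBoard; // assumed to be assigned in the Inspector
    private Rigidbody body;

    // Awake: cache references that belong to this object.
    private void Awake()
    {
        body = GetComponent<Rigidbody>();
    }

    // Start: talk to other objects, whose Awake has already run.
    private void Start()
    {
        scoreBoard.Register(this);
    }

    // FixedUpdate: physics work on the Rigidbody belongs here rather than in Update.
    private void FixedUpdate()
    {
        body.AddForce(Vector3.up * 0.1f);
    }
}
```

If `Pickup` called `Register` from its own Awake instead, the list inside `ScoreBoard` might not exist yet, depending on which Awake happened to run first.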

See the script lifecycle flowchart for the specific order of execution of event functions.

If your project has demanding performance requirements (for example, an open-world game), consider creating a custom update manager using Update, LateUpdate, or FixedUpdate.

A common usage pattern for Update or LateUpdate is to run logic only when some condition is met. This can lead to a number of per-frame callbacks that effectively run no code except for checking that condition.

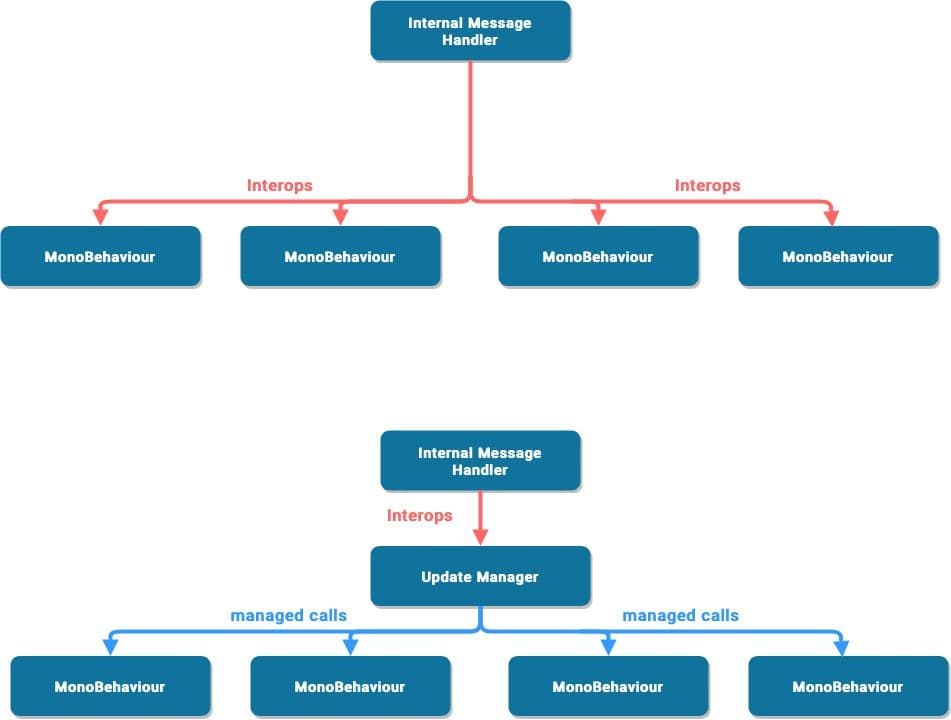

Whenever Unity calls a message method like Update or LateUpdate, it makes an interop call, meaning a call from the C/C++ side to the managed C# side. For a small number of objects, this is not an issue. When you have thousands of objects, this overhead starts to become significant.

Subscribe active objects to the update manager when they need callbacks, and unsubscribe them when they don't. This pattern can eliminate many of the interop calls to your MonoBehaviour objects.
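A minimal sketch of such an update manager is shown below. The names `IUpdatable`, `UpdateManager`, `Tick`, and `Mover` are assumptions made for this illustration, not Unity APIs. The idea is that only the manager receives Unity's Update message, and it forwards the call to subscribers in plain C#.

```csharp
using System.Collections.Generic;
using UnityEngine;

// Hypothetical interface implemented by anything that wants a per-frame callback.
public interface IUpdatable
{
    void Tick(float deltaTime);
}

// One MonoBehaviour receives Update from the engine and fans it out in managed code.
public class UpdateManager : MonoBehaviour
{
    private static readonly List<IUpdatable> updatables = new List<IUpdatable>();

    public static void Subscribe(IUpdatable item)
    {
        // Linear search; acceptable for a sketch, consider a HashSet for large counts.
        if (!updatables.Contains(item))
        {
            updatables.Add(item);
        }
    }

    public static void Unsubscribe(IUpdatable item)
    {
        updatables.Remove(item);
    }

    private void Update()
    {
        float deltaTime = Time.deltaTime;

        // Iterating backwards lets an item unsubscribe itself from inside Tick.
        for (int i = updatables.Count - 1; i >= 0; i--)
        {
            updatables[i].Tick(deltaTime);
        }
    }
}

// Example subscriber: it has no Update method of its own.
public class Mover : MonoBehaviour, IUpdatable
{
    private void OnEnable()
    {
        UpdateManager.Subscribe(this);
    }

    private void OnDisable()
    {
        UpdateManager.Unsubscribe(this);
    }

    public void Tick(float deltaTime)
    {
        transform.Translate(Vector3.forward * deltaTime);
    }
}
```

The sketch assumes a single `UpdateManager` instance exists in the scene. Profile before and after adopting it, since the benefit depends on how many objects were receiving Update.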

Refer to the game engine-specific optimization techniques for examples of implementation.

Consider whether code must run every frame. You can move unnecessary logic out of Update, LateUpdate, and FixedUpdate. These Unity event functions are convenient places to put code that must update every frame, but you can extract any logic that does not need to update that often.

Only execute logic when things change. Remember to use techniques such as the observer pattern, in the form of events, so that a specific handler runs only when the change it cares about occurs.
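As a hedged illustration of the observer pattern with C# events, the sketch below refreshes a health bar only when the value changes instead of polling it in Update. `Health`, `HealthBar`, `HealthChanged`, and `TakeDamage` are hypothetical names chosen for the example.

```csharp
using System;
using UnityEngine;

// Hypothetical subject: raises an event only when its value changes.
public class Health : MonoBehaviour
{
    public event Action<int> HealthChanged;

    private int current = 100;

    public void TakeDamage(int amount)
    {
        current = Mathf.Max(0, current - amount);
        HealthChanged?.Invoke(current);
    }
}

// Hypothetical observer: no Update method, it reacts to the event instead.
public class HealthBar : MonoBehaviour
{
    [SerializeField] private Health health; // assumed to be assigned in the Inspector

    private void OnEnable()
    {
        health.HealthChanged += Refresh;
    }

    private void OnDisable()
    {
        health.HealthChanged -= Refresh;
    }

    private void Refresh(int value)
    {
        // Scale the bar horizontally to reflect remaining health (0 to 100).
        transform.localScale = new Vector3(value / 100f, 1f, 1f);
    }
}
```

Unsubscribing in OnDisable matters: a handler left attached keeps being called, and keeps the observer referenced, after the object is disabled.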

If you need to use Update, you might run the code every n frames. This is one way to apply time slicing, a common technique for distributing a heavy workload across multiple frames.

For example, you can run ExampleExpensiveFunction once every three frames by checking whether Time.frameCount % interval == 0 with an interval of 3.
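The original listing is not reproduced here, so the following is a minimal sketch consistent with the description. The class name `TimeSlicedWork` and the empty body of `ExampleExpensiveFunction` are placeholders.

```csharp
using UnityEngine;

public class TimeSlicedWork : MonoBehaviour
{
    // Run the expensive work once every 'interval' frames.
    private const int interval = 3;

    private void Update()
    {
        if (Time.frameCount % interval == 0)
        {
            ExampleExpensiveFunction();
        }
    }

    private void ExampleExpensiveFunction()
    {
        // Placeholder for the heavy workload.
    }
}
```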

The trick is to interleave this with other work that runs on the other frames. In that case, you could "schedule" other expensive functions when Time.frameCount % interval == 1 or Time.frameCount % interval == 2.

Alternatively, use a custom update manager class to update subscribed objects every n frames.

In Unity versions prior to 2020.2, GameObject.Find, GameObject.GetComponent, and Camera.main can be expensive, so it's best to avoid calling them in Update methods.

Additionally, try to avoid placing expensive methods in OnEnable and OnDisable if they are called often. Calling these methods frequently can contribute to CPU spikes.

Wherever possible, run expensive functions in MonoBehaviour.Awake and MonoBehaviour.Start, during the initialization phase. Cache the references you need and reuse them later. See the earlier section on the Unity PlayerLoop for more detail on script execution order.

Here's an example that demonstrates inefficient use of a repeated GetComponent call:

```csharp
void Update()
{
    // Looks up the component again on every frame.
    Renderer myRenderer = GetComponent<Renderer>();
    ExampleFunction(myRenderer);
}
```

Instead, call GetComponent only once and cache the result in a field. The cached result can then be reused in Update without any further calls to GetComponent.
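A minimal sketch of the cached version might look like this. The class name `CachedRendererExample` and the empty `ExampleFunction` are placeholders for illustration.

```csharp
using UnityEngine;

public class CachedRendererExample : MonoBehaviour
{
    private Renderer myRenderer;

    private void Start()
    {
        // Look up the component once, during initialization.
        myRenderer = GetComponent<Renderer>();
    }

    private void Update()
    {
        // Reuse the cached reference on every frame.
        ExampleFunction(myRenderer);
    }

    private void ExampleFunction(Renderer target)
    {
        // Placeholder for per-frame work that needs the Renderer.
    }
}
```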

Read more about the order of execution for event functions.

Log statements (especially in Update, LateUpdate, or FixedUpdate) can slow down performance, so disable your log statements before making a build. To do this quickly, consider using a Conditional attribute together with a preprocessor directive.

For example, you might create a custom logging class whose methods carry a Conditional attribute tied to a scripting define symbol such as ENABLE_LOG.
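The original listing is missing from this text, so here is a minimal sketch of such a class. The class name `Logging` is an assumption; `ENABLE_LOG` is the symbol the text refers to, and `System.Diagnostics.ConditionalAttribute` is the standard .NET attribute.

```csharp
// Calls to Logging.Log are compiled only when ENABLE_LOG is defined.
public static class Logging
{
    [System.Diagnostics.Conditional("ENABLE_LOG")]
    public static void Log(object message)
    {
        UnityEngine.Debug.Log(message);
    }
}
```

You would then call `Logging.Log("Player spawned")` instead of `Debug.Log`. When the symbol is not defined, the compiler omits the call and the evaluation of its arguments, so any string formatting in the argument is skipped as well.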

Generate your log messages with your custom class. If you disable the ENABLE_LOG symbol in Player Settings > Scripting Define Symbols, all of your log statements disappear in one go.

Handling strings and text is a common source of performance problems in Unity projects. That's why removing log statements and their expensive string formatting can potentially be a big performance win.

Similarly, empty MonoBehaviours require resources, so you should remove empty Update or LateUpdate methods. Use preprocessor directives if you rely on these methods for testing:

```csharp
#if UNITY_EDITOR
void Update()
{
}
#endif
```

Here, you can use Update in the Editor for testing without unnecessary overhead slipping into your build.

This blog post on 10,000 Update calls explains how Unity executes MonoBehaviour.Update.

Use the Stack Trace options in the Player Settings to control what type of log messages appear. If your application logs errors or warning messages in your release build (for example, to generate crash reports in the wild), disable stack traces to improve performance.

Learn more about stack trace logging.

Unity does not use string names to address Animator, Material, or Shader properties internally. For speed, all property names are hashed into property IDs, and these IDs are used to address the properties.

When using a Set or Get method on an Animator, Material, or Shader, use the integer-valued method instead of the string-valued methods. The string-valued methods hash the string and then forward the hashed ID to the integer-valued methods.

Use Animator.StringToHash for Animator property names and Shader.PropertyToID for Material and Shader property names.
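The sketch below shows one way to hash property names once and reuse the IDs. The parameter name "Speed" and the shader property "_BaseColor" are assumptions for illustration; substitute the names your Animator Controller and shader actually define.

```csharp
using UnityEngine;

public class PropertyIdExample : MonoBehaviour
{
    // Hash each name once and reuse the integer ID afterwards.
    private static readonly int SpeedHash = Animator.StringToHash("Speed");      // assumed parameter name
    private static readonly int BaseColorId = Shader.PropertyToID("_BaseColor"); // assumed property name

    [SerializeField] private Animator animator;       // assumed to be assigned in the Inspector
    [SerializeField] private Renderer targetRenderer; // assumed to be assigned in the Inspector

    public void Apply(float speed, Color color)
    {
        // Integer-valued overloads skip the per-call string hashing.
        animator.SetFloat(SpeedHash, speed);

        // Note: accessing .material creates a per-renderer material instance.
        targetRenderer.material.SetColor(BaseColorId, color);
    }
}
```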

Related to this is the choice of data structure, which affects performance when you iterate thousands of times per frame. Follow the MSDN guide to data structures in C# as a general guide for choosing the right structure.

Instantiate and Destroy can generate garbage collection (GC) spikes. This is generally a slow process, so rather than regularly instantiating and destroying GameObjects (for example, bullets fired from a gun), use pools of preallocated objects that can be reused and recycled.

Create the reusable instances at a point in the game when a CPU spike is less noticeable, such as during a menu screen or a loading screen. Track this "pool" of objects with a collection. During gameplay, simply enable the next available instance when needed, and disable objects instead of destroying them before returning them to the pool. This reduces the number of managed allocations in your project and can prevent GC problems.
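The steps above can be sketched as a small pool component. This is a minimal illustration under stated assumptions, not a production-ready pool: `SimplePool`, `Get`, and `Release` are hypothetical names, and the prefab and initial size are assumed to be set in the Inspector.

```csharp
using System.Collections.Generic;
using UnityEngine;

public class SimplePool : MonoBehaviour
{
    [SerializeField] private GameObject prefab;     // assumed to be assigned in the Inspector
    [SerializeField] private int initialSize = 20;  // assumed default

    // The collection that tracks currently unused instances.
    private readonly Stack<GameObject> inactive = new Stack<GameObject>();

    private void Awake()
    {
        // Pay the instantiation cost up front, for example behind a loading screen.
        for (int i = 0; i < initialSize; i++)
        {
            GameObject instance = Instantiate(prefab, transform);
            instance.SetActive(false);
            inactive.Push(instance);
        }
    }

    public GameObject Get(Vector3 position, Quaternion rotation)
    {
        // Reuse an existing instance if one is available; grow the pool otherwise.
        GameObject instance = inactive.Count > 0
            ? inactive.Pop()
            : Instantiate(prefab, transform);

        instance.transform.SetPositionAndRotation(position, rotation);
        instance.SetActive(true);
        return instance;
    }

    public void Release(GameObject instance)
    {
        // Disable instead of destroying, then return the instance to the pool.
        instance.SetActive(false);
        inactive.Push(instance);
    }
}
```

A gun script would call `Get` when firing and `Release` when the bullet hits something or expires, so no bullets are instantiated or destroyed during gameplay unless the pool runs dry.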

Similarly, avoid adding components at runtime, because invoking AddComponent comes with some cost. Unity must check for duplicates or other required components whenever it adds a component at runtime. Instantiating a Prefab with the desired components already set up is more performant, so use this in combination with your object pool.

On a related note, when moving Transforms, use Transform.SetPositionAndRotation to update both the position and rotation at once. This avoids the overhead of modifying a Transform twice.
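As a brief illustration, the hypothetical helper below moves an object with a single Transform update. `MoveExample` and `MoveTo` are names invented for this sketch.

```csharp
using UnityEngine;

public class MoveExample : MonoBehaviour
{
    public void MoveTo(Vector3 position, Quaternion rotation)
    {
        // One call instead of assigning transform.position and
        // transform.rotation in two separate steps.
        transform.SetPositionAndRotation(position, rotation);
    }
}
```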

If you need to instantiate a GameObject at runtime, you can optimize by setting its parent and position as part of the Instantiate call rather than in separate steps afterward.
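The original listing is missing here, so the following sketch uses the `Object.Instantiate` overloads that accept a parent, or a position, rotation, and parent. `SpawnExample`, `Spawn`, and `SpawnAt` are hypothetical names.

```csharp
using UnityEngine;

public class SpawnExample : MonoBehaviour
{
    [SerializeField] private GameObject prefab; // assumed to be assigned in the Inspector
    [SerializeField] private Transform parent;  // assumed to be assigned in the Inspector

    public GameObject Spawn()
    {
        // Parent is set during instantiation, not in a later SetParent call.
        return Instantiate(prefab, parent);
    }

    public GameObject SpawnAt(Vector3 position, Quaternion rotation)
    {
        // Position, rotation, and parent are all applied in one call.
        return Instantiate(prefab, position, rotation, parent);
    }
}
```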

For more on Object.Instantiate, see the Scripting API.

Learn how to create a simple object pooling system in Unity here.

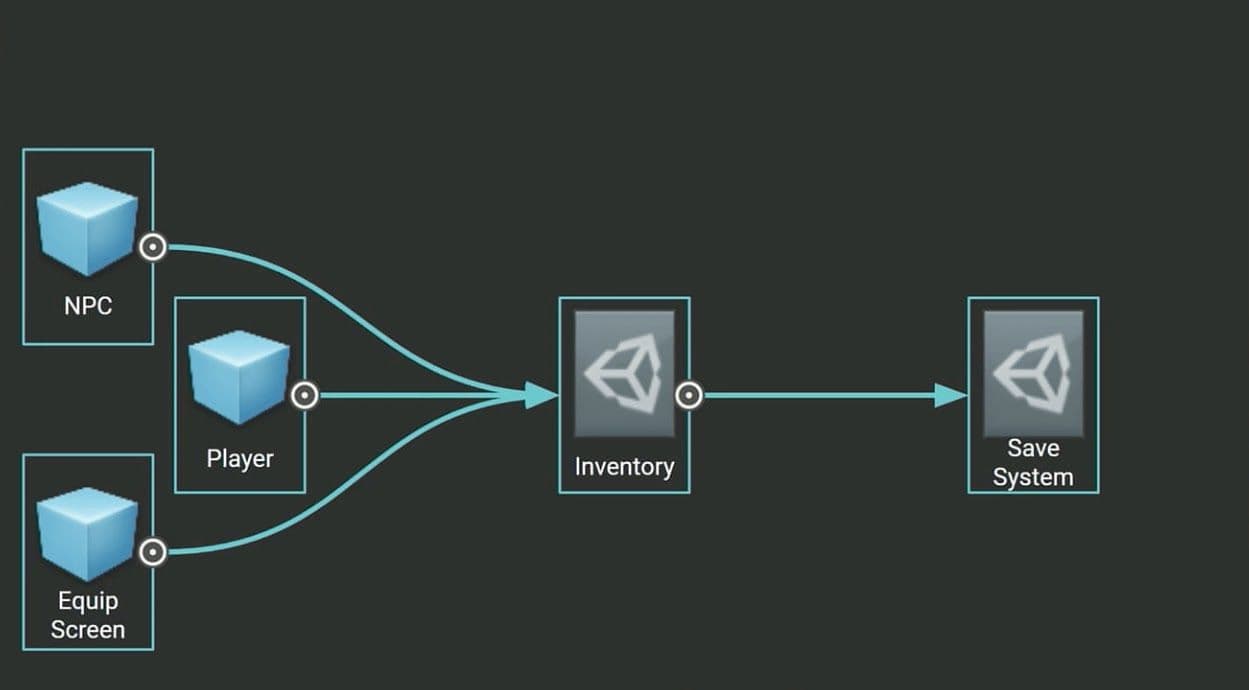

Store unchanging values or settings in a ScriptableObject instead of a MonoBehaviour. A ScriptableObject is an asset that lives inside the project. It only needs to be set up once, and it cannot be directly attached to a GameObject.

Create fields in the ScriptableObject to store your values or settings, then reference the ScriptableObject in your MonoBehaviours. Using fields from the ScriptableObject can prevent unnecessary duplication of data every time you instantiate an object with that MonoBehaviour.
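A minimal sketch of this arrangement is shown below. `EnemySettings`, `EnemyMover`, the field names, and the menu path are all assumptions made for the example; in a project, each class lives in its own file.

```csharp
using UnityEngine;

// Shared, unchanging settings stored once as a project asset.
[CreateAssetMenu(fileName = "EnemySettings", menuName = "Examples/Enemy Settings")]
public class EnemySettings : ScriptableObject
{
    public float moveSpeed = 3f;
    public int maxHealth = 100;
}

// Each instance holds only a reference to the shared asset,
// plus the state that really is per-instance.
public class EnemyMover : MonoBehaviour
{
    [SerializeField] private EnemySettings settings; // assumed to be assigned in the Inspector
    private int health;

    private void Awake()
    {
        health = settings.maxHealth;
    }

    private void Update()
    {
        transform.Translate(Vector3.forward * settings.moveSpeed * Time.deltaTime);
    }
}
```

With a hundred enemies in the scene, the settings exist once in the asset instead of being copied into each of the hundred components.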

Watch this Introduction to ScriptableObjects tutorial and find the relevant documentation here.

One of our most comprehensive guides to date collects over 80 actionable tips on how to optimize your games for PC and console. Created by our expert Success and Accelerate Solutions engineers, these in-depth tips will help you get the most out of Unity and boost your game's performance.