- Getting started with the High Definition Render Pipeline

- Anti-aliasing, volumes, and exposure with the High Definition Render Pipeline

- Lights and shadows with HDRP

- Reflections and real-time lighting effects

- Post-processing and ray tracing with HDRP

- Introduction to the VFX Graph in Unity

- URP Project & Quality settings: Learn from the URP 3D Sample

- 10 ways to speed up your programming workflows in Unity with Visual Studio 2019

- Understanding Unity’s serialization language, YAML

- Speed up your programmer workflows

- Formatting best practices for C# scripting in Unity

- Naming and code style tips for C# scripting in Unity

- Create modular and maintainable code with the observer pattern

- Develop a modular, flexible codebase with the state programming pattern

- Use object pooling to boost performance of C# scripts in Unity

- Build a modular codebase with MVC and MVP programming patterns

- How to use the factory pattern for object creation at runtime

- Use the command pattern for flexible and extensible game systems

- How to use the Model-View-ViewModel pattern

- How to use the strategy pattern

- How to use the Flyweight pattern

- How to use the Dirty Flag pattern

- A guide on using the new AI Navigation package in Unity 2022 LTS and above

- Get started with the Unity ScriptableObjects demo

- Use ScriptableObject-based events with the observer pattern

- Use ScriptableObject-based enums in your Unity project

- Separate game data and logic in Unity with ScriptableObjects

- Tools for profiling and debugging

- Performance profiling tips for game developers

- Optimize your mobile game performance: Expert tips on graphics and assets

- Optimize your mobile game performance: Get expert tips on physics, UI, and audio settings

- Helpful tips on advanced profiling

- Profiling in Unity 2021 LTS: What, when, and how

- How to optimize your game with the Profile Analyzer

- Performance optimization for high-end graphics

- Managing GPU usage for PC and console games

- Performance optimization: Project configuration and assets

- Tips for performance optimization in Unity: Programming and code architecture

- How to troubleshoot imported animations in Unity

- Tips for building animator controllers in Unity

- Mobile optimization tips for technical artists – Part I

- Mobile optimization tips for technical artists – Part II

- Systems that create ecosystems: Emergent game design

- Unpredictably fun: The value of randomization in game design

- Introduction to Asset Manager transfer methods in Unity

- Build a simple product configurator in Unity in one hour or less

- Creator Series | Data ingestion: Manage CAD, BIM and Point Cloud data

- Unlock CAD & Mesh Data with Unity Asset Transformer Studio

- Ingesting 3D data into Unity Industry with Unity Asset Transformer Toolkit

Game development

- The eight factors of multiplayer game development

- How to manage network latency in multiplayer games

- Nine use cases for Unity’s Game Backend tools

Player engagement

- Improve retention at every stage of the player lifecycle

- How to apply A/B testing to games

Unity Ads

- How to monetize effectively and sustainably in mobile games

Download this e-book to learn about all the capabilities included in HDRP in Unity 6 and 6.1.

Read this major new guide that focuses on UI Toolkit features, with sections covering Unity 6 capabilities like data binding, localization, custom controls, and much more.

Read this e-book that assembles tips and tricks from professional developers for deploying ScriptableObjects in production.

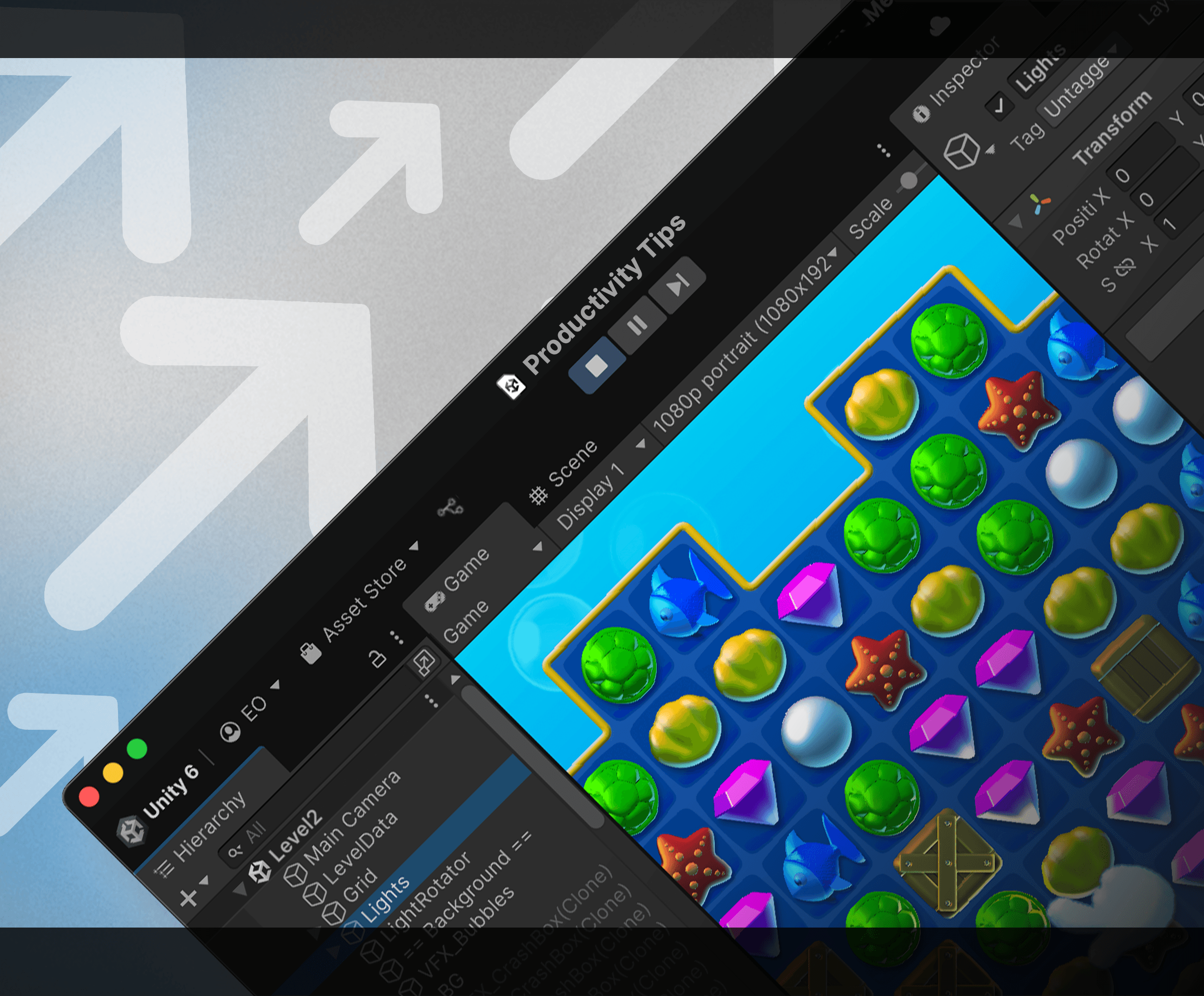

This updated guide, more than 100 pages long, offers tips to speed up your workflows at every stage of game development. It's useful whether you're just starting out or have been a Unity developer for years.

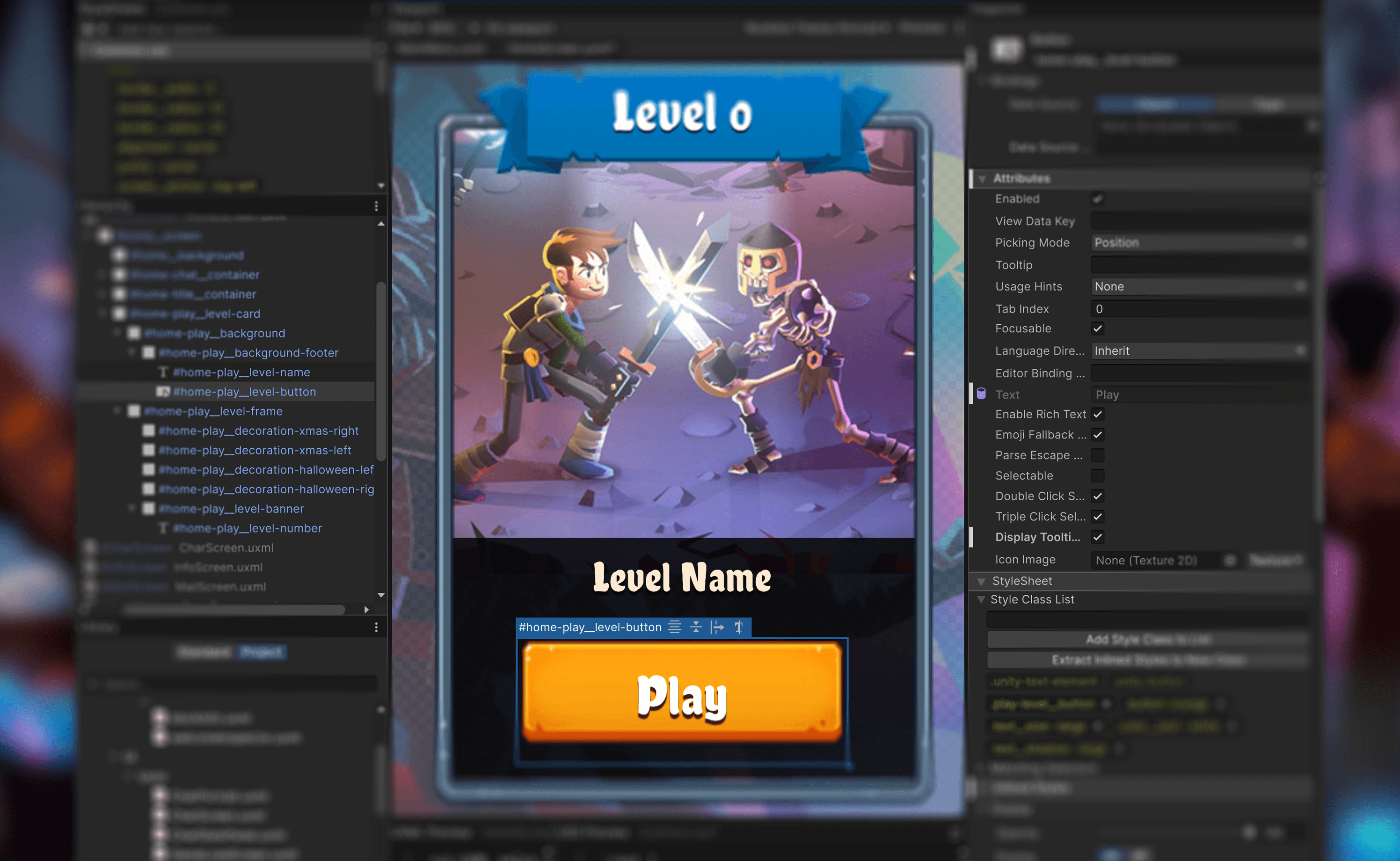

This official UI Toolkit project provides game interfaces that showcase UI Toolkit and UI Builder workflows for runtime games. Explore this project with its companion e-book for more great tips.

QuizU is an official Unity sample that uses UI Toolkit to demonstrate various design patterns and project architecture, including MVP, the state pattern, menu screen management, and much more.

Gem Hunter Match is an official Unity cross-platform sample project that showcases the capabilities of 2D lighting and visual effects in the Universal Render Pipeline (URP) in Unity 2022 LTS.